ByteDance Seed recently released research that may change how we build reasoning AI. For years, developers and AI researchers have struggled to ‘cold-start’ Large Language Models (LLMs) into Long Chain-of-Thought (Long CoT) models. Most models lose their way or fail to transfer patterns during multi-step reasoning.

The ByteDance team identified the problem: we have been looking at reasoning the wrong way. Instead of just words or nodes, effective AI reasoning has a stable, molecular-like structure.

The Three ‘Chemical Bonds’ of Thought

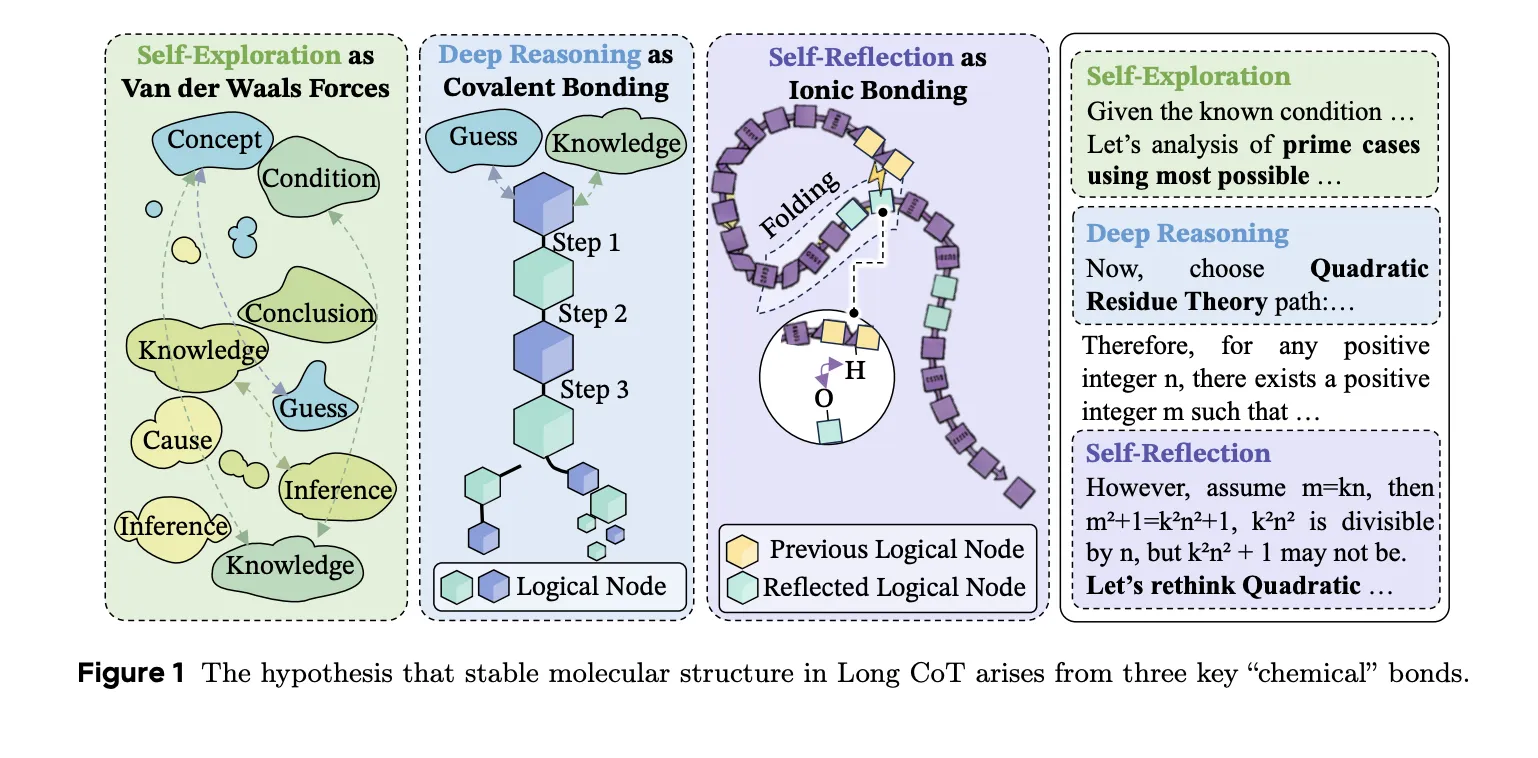

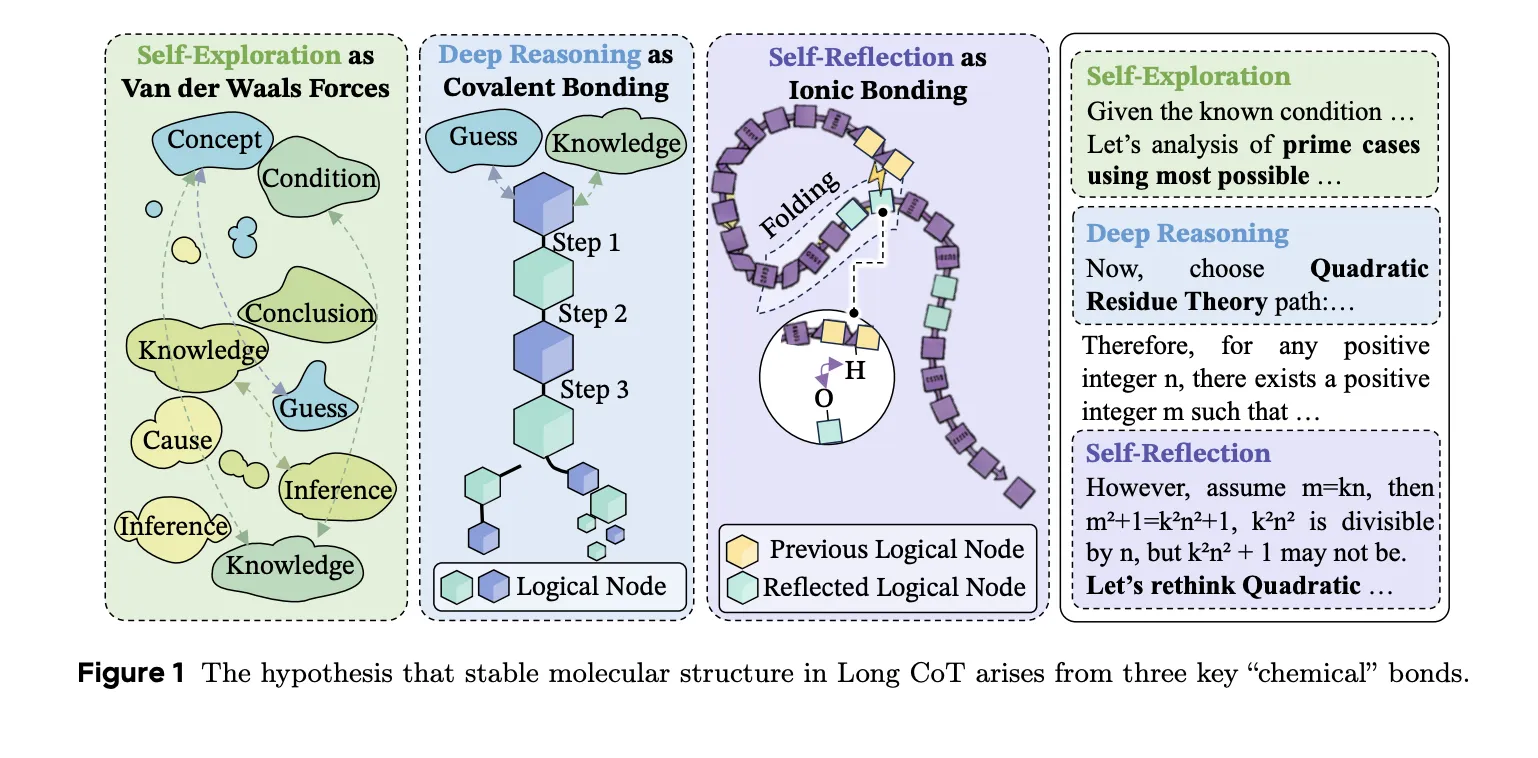

The researchers posit that high-quality reasoning trajectories are held together by three interaction types. These mirror the forces found in organic chemistry:

- Deep Reasoning as Covalent Bonds: This forms the primary ‘backbone’ of the thought process. It encodes strong logical dependencies where Step A must justify Step B. Breaking this bond destabilizes the entire answer.

- Self-Reflection as Hydrogen Bonds: This acts as a stabilizer. Just as proteins gain stability when chains fold, reasoning stabilizes when later steps (like Step 100) revise or reinforce earlier premises (like Step 10). In their tests, 81.72% of reflection steps successfully reconnected to previously formed clusters.

- Self-Exploration as Van der Waals Forces: These are weak bridges between distant clusters of logic. They allow the model to probe new possibilities or alternative hypotheses before imposing stronger logical constraints.
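To make the three bond types concrete, here is a minimal sketch of how a labeled reasoning trace could be summarized. The `Step` class, the label names, and the `links_to` field are assumptions made for illustration and do not come from the paper. The reconnect rate is only a rough analogue of the 81.72% figure quoted above, not the paper's metric.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Hypothetical behavior labels, named after the three bond types in the article.
DEEP, REFLECT, EXPLORE = "deep_reasoning", "self_reflection", "self_exploration"

@dataclass
class Step:
    index: int
    behavior: str
    links_to: Optional[int] = None  # earlier step this one depends on or revisits

def bond_distribution(trace):
    """Return the fraction of steps carrying each behavior label."""
    total = len(trace)
    if total == 0:
        return {label: 0.0 for label in (DEEP, REFLECT, EXPLORE)}
    counts = Counter(step.behavior for step in trace)
    return {label: counts[label] / total for label in (DEEP, REFLECT, EXPLORE)}

def reflection_reconnect_rate(trace):
    """Fraction of reflection steps that point back to an earlier step."""
    reflections = [s for s in trace if s.behavior == REFLECT]
    if not reflections:
        return 0.0
    reconnected = [
        s for s in reflections
        if s.links_to is not None and s.links_to < s.index
    ]
    return len(reconnected) / len(reflections)

# A made-up six-step trace, purely illustrative.
trace = [
    Step(0, DEEP),
    Step(1, DEEP, links_to=0),
    Step(2, EXPLORE),
    Step(3, DEEP, links_to=1),
    Step(4, REFLECT, links_to=1),  # revisits an earlier premise
    Step(5, REFLECT),              # reflection with no link back
]

print(bond_distribution(trace))
print(reflection_reconnect_rate(trace))
```

In practice the labels would have to come from some annotation step (a classifier or a judge model), which this sketch leaves out.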

Why ‘Wait, Let Me Think’ Isn’t Enough

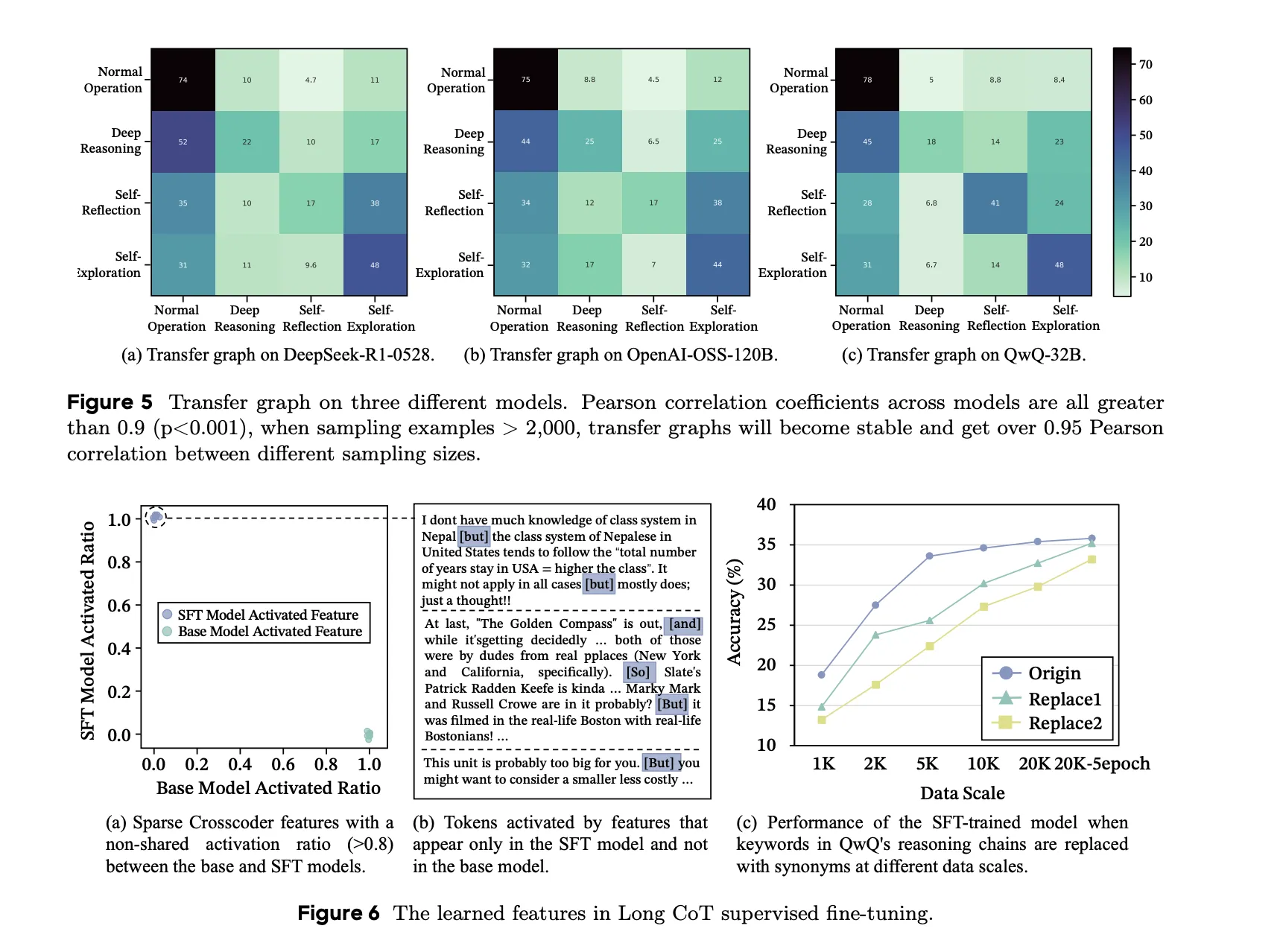

Most AI developers and researchers try to fix reasoning by training models to imitate keywords like ‘wait’ or ‘maybe’. The ByteDance team showed that models actually learn the underlying reasoning behavior, not the surface words.

The research team identifies a phenomenon called Semantic Isomers. These are reasoning chains that solve the same task and use the same concepts but differ in how their logical ‘bonds’ are distributed.

Key findings include:

- Imitation Fails: Fine-tuning on human-annotated traces or using In-Context Learning (ICL) from weak models fails to build stable Long CoT structures.

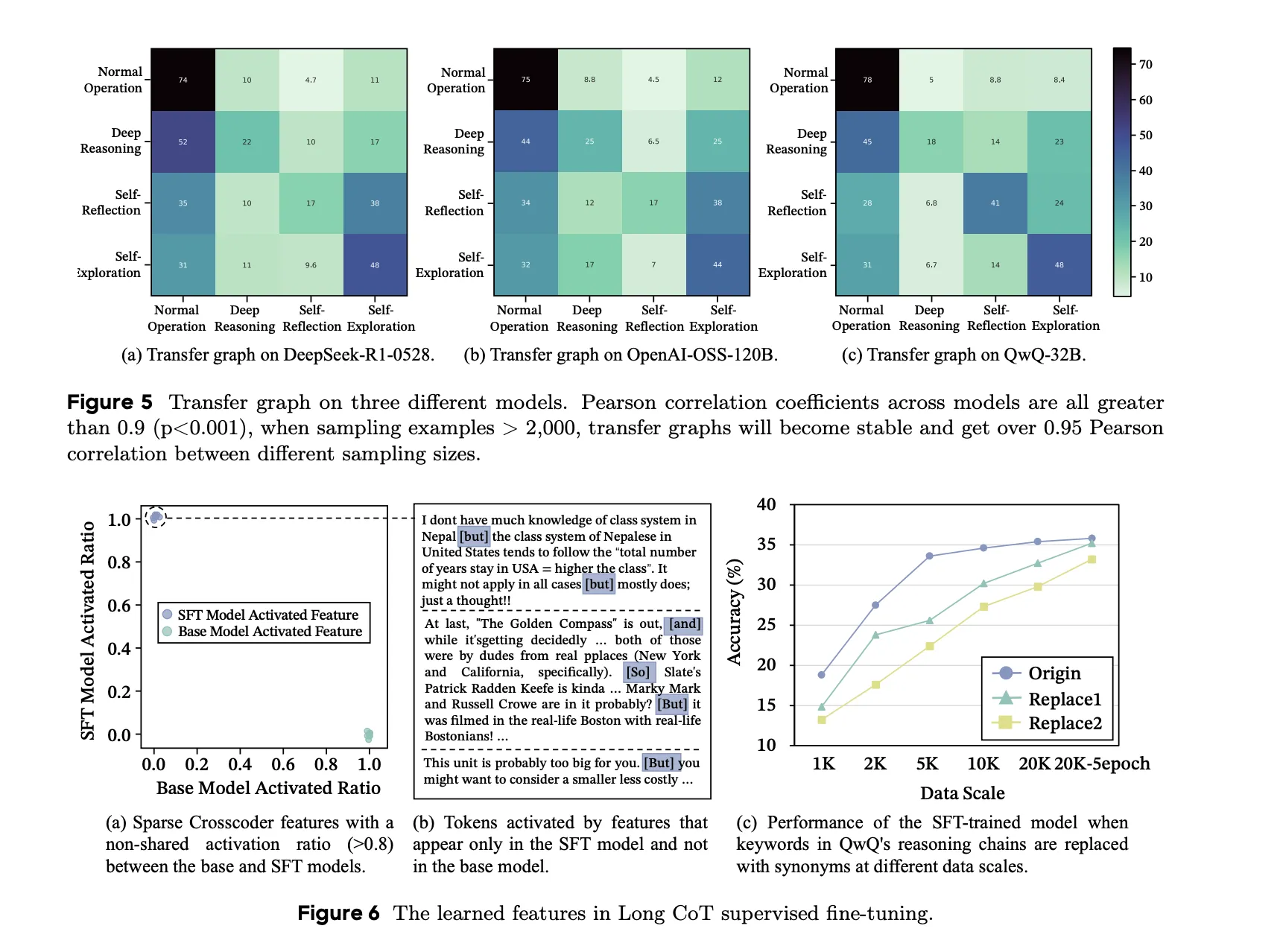

- Structural Conflict: Mixing reasoning data from different strong teachers (like DeepSeek-R1 and OpenAI-OSS) actually destabilizes the model. Even when the data is similar, the different “molecular” structures cause structural chaos and reduce performance.

- Information Flow: Unlike humans, who have uniform information gain, strong reasoning models exhibit metacognitive oscillation. They alternate between high-entropy exploration and stable convergent validation.
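The oscillation between high-entropy exploration and low-entropy validation can be illustrated with a small calculation. This is a sketch under stated assumptions: the per-step probability distributions and the 1.0-bit threshold are invented for the example, and the paper may measure information flow differently.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def count_phase_switches(entropies, threshold):
    """Count crossings of the threshold between consecutive steps."""
    phases = [h > threshold for h in entropies]
    return sum(1 for a, b in zip(phases, phases[1:]) if a != b)

# Hypothetical per-step distributions over four candidate continuations.
step_distributions = [
    [0.25, 0.25, 0.25, 0.25],  # spread out: exploring
    [0.97, 0.01, 0.01, 0.01],  # peaked: validating
    [0.40, 0.30, 0.20, 0.10],  # spread out again
    [0.90, 0.05, 0.03, 0.02],  # peaked again
]

entropies = [shannon_entropy(d) for d in step_distributions]
# The threshold of 1.0 bit is an arbitrary choice for this illustration.
switches = count_phase_switches(entropies, threshold=1.0)

print([round(h, 3) for h in entropies])
print(switches)
```

A trace that alternates between the two phases produces many switches, while a trace with roughly uniform information gain would produce few.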

MOLE-SYN: The Synthesis Method

To fix these issues, the ByteDance team introduced MOLE-SYN. It is a ‘distribution-transfer-graph’ method. Instead of directly copying a teacher’s text, it transfers the behavioral structure to the student model.

It works by estimating a behavior transition graph from strong models and guiding a cheaper model to synthesize its own effective Long CoT structures. This decoupling of structure from surface text yields consistent gains across six major benchmarks, including GSM8K, MATH-500, and OlymBench.
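The idea of estimating a behavior transition graph and using it as a guide can be sketched in a few lines. This is not the MOLE-SYN implementation. It assumes teacher traces have already been reduced to sequences of behavior labels, and it uses a plain first-order random walk as a stand-in for whatever guidance procedure the paper uses. All function names and label strings are hypothetical.

```python
import random
from collections import defaultdict

def estimate_transition_graph(label_sequences):
    """Estimate P(next behavior | current behavior) from labeled traces."""
    counts = defaultdict(lambda: defaultdict(int))
    for seq in label_sequences:
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    graph = {}
    for current, nexts in counts.items():
        total = sum(nexts.values())
        graph[current] = {nxt: n / total for nxt, n in nexts.items()}
    return graph

def sample_skeleton(graph, start, length, rng):
    """Random-walk the graph to produce a sequence of behaviors."""
    skeleton = [start]
    while len(skeleton) < length and skeleton[-1] in graph:
        options = graph[skeleton[-1]]
        nxt = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        skeleton.append(nxt)
    return skeleton

# Made-up teacher traces, already reduced to behavior labels.
teacher_traces = [
    ["deep", "deep", "explore", "deep", "reflect"],
    ["deep", "reflect", "deep", "deep", "explore", "reflect"],
]

graph = estimate_transition_graph(teacher_traces)
skeleton = sample_skeleton(graph, start="deep", length=6, rng=random.Random(0))

print(graph)
print(skeleton)
```

A sampled skeleton could then be used to prompt a cheaper model one step at a time ("now reflect on the previous step", "now explore an alternative"), so the student writes its own text while following the teacher's behavioral structure.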

Protecting the ‘Thought Molecule’

This research also sheds light on how private AI companies protect their models. Exposing full reasoning traces allows others to clone the model’s internal procedures.

The ByteDance team found that summarization and reasoning compression are effective defenses. By reducing the token count, often by more than 45%, companies disrupt the reasoning bond distributions. This creates a gap between what the model outputs and its internal ‘error-bounded transitions,’ making it much harder to distill the model’s capabilities.
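A rough way to picture this defense is to compare the behavior mix of a full trace with that of its summary. The numbers below are invented, and total variation distance is one convenient choice of metric here, not necessarily the one used in the paper.

```python
def token_reduction(original_tokens, compressed_tokens):
    """Fraction of tokens removed by compression."""
    return 1 - compressed_tokens / original_tokens

def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(l, 0.0) - q.get(l, 0.0)) for l in labels)

# Hypothetical behavior mix of a full reasoning trace and of its summary.
full_trace = {"deep": 0.5, "reflect": 0.3, "explore": 0.2}
summary = {"deep": 0.9, "reflect": 0.1, "explore": 0.0}

# Hypothetical token counts: 2000 tokens compressed to 1000.
reduction = token_reduction(2000, 1000)
shift = total_variation(full_trace, summary)

print(f"token reduction: {reduction:.0%}")
print(f"distribution shift: {shift:.2f}")
```

In this toy case the summary keeps mostly the deep-reasoning steps and drops exploration entirely, so a student trained on it would see a very different behavior distribution from the one the model used internally.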

Key Takeaways

- Reasoning as ‘Molecular’ Bonds: Effective Long Chain-of-Thought (Long CoT) is defined by three specific ‘chemical’ bonds: Deep Reasoning (covalent-like) forms the logical backbone, Self-Reflection (hydrogen-bond-like) provides global stability through logical folding, and Self-Exploration (van der Waals-like) bridges distant semantic concepts.

- Behavior Over Keywords: Models internalize underlying reasoning structures and transition distributions rather than just surface-level lexical cues like ‘wait’ or ‘maybe’. Replacing keywords with synonyms does not significantly impact performance, suggesting that true reasoning depth comes from learned behavioral motifs.

- The ‘Semantic Isomer’ Conflict: Combining heterogeneous reasoning data from different strong models (e.g., DeepSeek-R1 and OpenAI-OSS) can trigger ‘structural chaos’. Even when data sources are statistically similar, incompatible behavioral distributions can break logical coherence and degrade model performance.

- MOLE-SYN Method: This ‘distribution-transfer-graph’ framework allows models to synthesize effective Long CoT structures from scratch using cheaper instruction LLMs. By transferring the behavioral transition graph instead of direct text, MOLE-SYN achieves performance close to expensive distillation while stabilizing Reinforcement Learning (RL).

- Protection via Structural Disruption: Private LLMs can protect their internal reasoning processes through summarization and compression. Reducing token count by roughly 45% or more effectively ‘breaks’ the bond distributions, making it significantly harder for unauthorized models to clone internal reasoning procedures via distillation.
