Google has officially shifted the Gemini era into high gear with the release of Gemini 3.1 Pro, the first version update in the Gemini 3 series. This release is not just a minor patch; it is a targeted strike on the ‘agentic’ AI market, focusing on reasoning stability, software engineering, and tool-use reliability.

For devs, this update signals a transition. We are moving from models that simply ‘chat’ to models that ‘work.’ Gemini 3.1 Pro is designed to be the core engine for autonomous agents that can navigate file systems, execute code, and reason through scientific problems with a success rate that now rivals, and in some cases exceeds, the industry’s most elite frontier models.

Massive Context, Precise Output

One of the most immediate technical upgrades is the handling of scale. Gemini 3.1 Pro Preview maintains a massive 1M token input context window. To put this in perspective for software engineers: you can now feed the model an entire medium-sized code repository, and it will have enough ‘memory’ to understand the cross-file dependencies without losing the plot.

However, the real news is the 65k token output limit. This 65k window is a significant jump for developers building long-form generators. Whether you are generating a 100-page technical manual or a complex, multi-module Python application, the model can now finish the job in a single turn without hitting an abrupt ‘max token’ wall.
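Before sending a whole repository, it can help to sanity-check the request against both limits. The sketch below is a rough budget check, not an official tool: it assumes the article's rounded figures (1M input, 65k output) and a heuristic of about 4 characters per token, and the helper names (`estimate_tokens`, `repo_tokens`, `fits`) are hypothetical. For exact counts, use the API's own token counting.

```python
# Rough budget check against the limits reported for Gemini 3.1 Pro Preview.
# ASSUMPTIONS: the article's rounded limits are used as-is, and ~4 characters
# per token is only a heuristic; real token counts depend on the tokenizer.
from pathlib import Path

INPUT_LIMIT_TOKENS = 1_000_000   # "1M token input context window" (rounded)
OUTPUT_LIMIT_TOKENS = 65_000     # "65k token output limit" (rounded)
CHARS_PER_TOKEN = 4              # heuristic; varies by language and content


def estimate_tokens(text: str) -> int:
    """Crude estimate: ceil(len(text) / CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def repo_tokens(root: str, suffixes: tuple[str, ...] = (".py",)) -> int:
    """Sum estimated tokens over files under root whose suffix is in suffixes."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits(prompt_tokens: int, requested_output_tokens: int) -> bool:
    """True if the prompt and the requested output both stay within the limits."""
    return (prompt_tokens <= INPUT_LIMIT_TOKENS
            and requested_output_tokens <= OUTPUT_LIMIT_TOKENS)
```

A call such as `fits(repo_tokens("./my_project"), 40_000)` gives a quick go/no-go before paying for a long-context request; `./my_project` is a placeholder path.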

Doubling Down on Reasoning

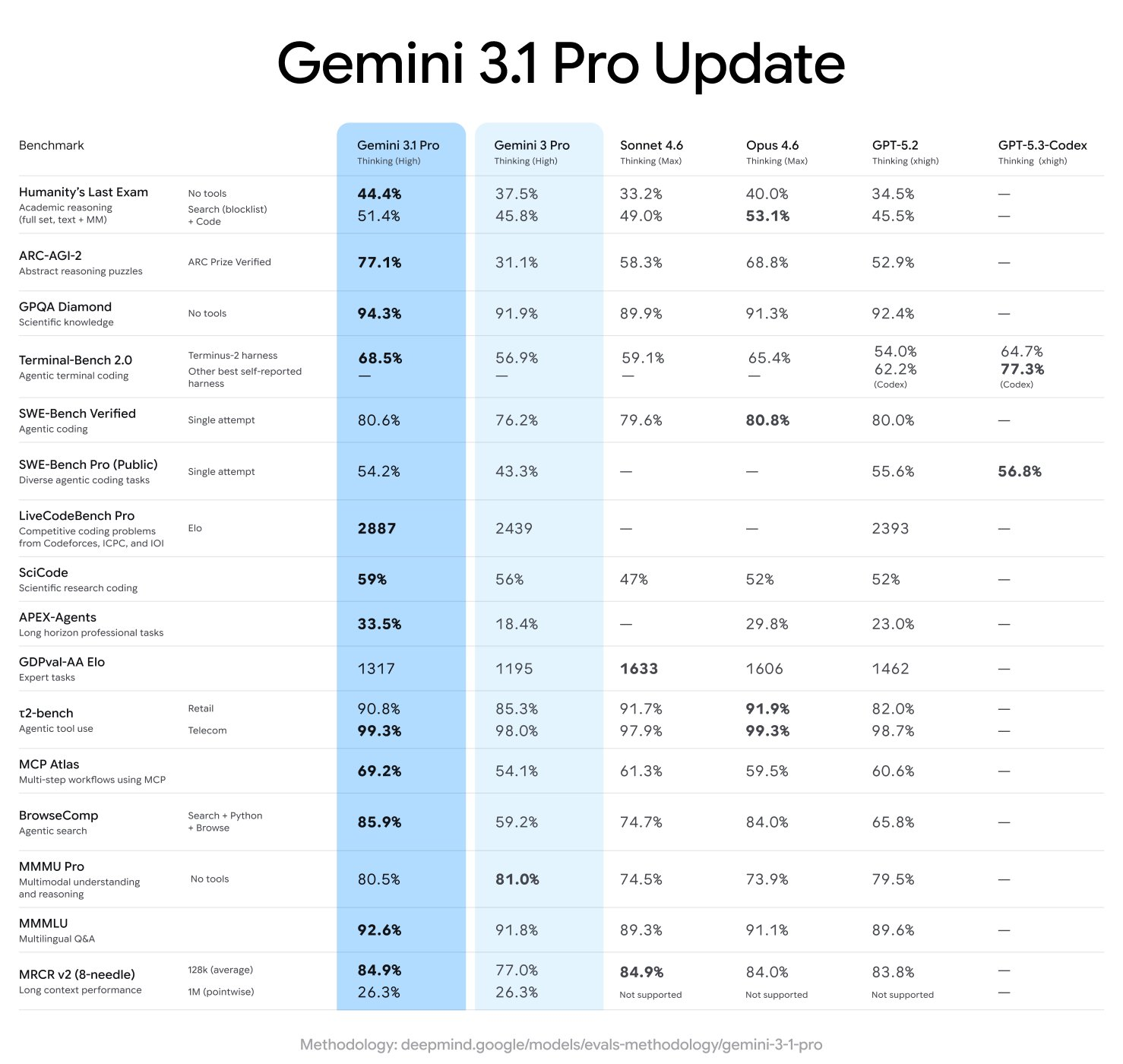

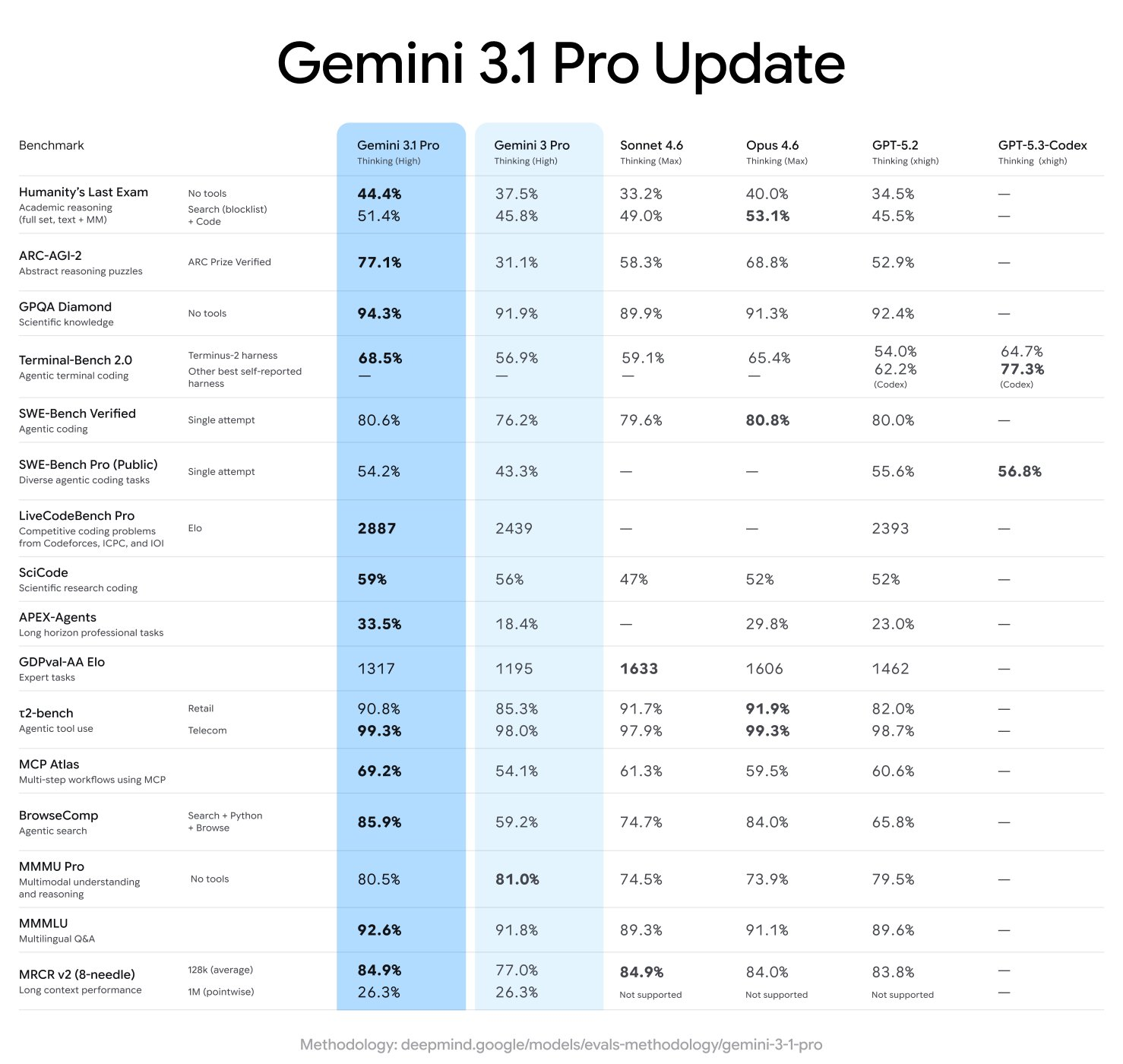

If Gemini 3.0 was about introducing ‘Deep Think,’ Gemini 3.1 is about making that thinking efficient. The performance jumps on rigorous benchmarks are notable:

| Benchmark | Score | What it measures |
| --- | --- | --- |
| ARC-AGI-2 | 77.1% | Ability to solve entirely new logic patterns |
| GPQA Diamond | 94.1% | Graduate-level scientific reasoning |
| SciCode | 58.9% | Python programming for scientific computing |
| Terminal-Bench Hard | 53.8% | Agentic coding and terminal use |
| Humanity’s Last Exam (HLE) | 44.7% | Reasoning against near-human limits |

The 77.1% on ARC-AGI-2 is the headline figure here. The Google team claims this represents more than double the reasoning performance of the original Gemini 3 Pro. This suggests the model is much less likely to rely on pattern matching from its training data and more capable of ‘figuring it out’ when faced with a novel edge case in a dataset.

The Agentic Toolkit: Custom Tools and ‘Antigravity’

The Google team is making a clear play for the developer’s terminal. Alongside the main model, they released a specialized endpoint: gemini-3.1-pro-preview-customtools.

This endpoint is optimized for developers who mix bash commands with custom functions. In previous versions, models often struggled to prioritize which tool to use, sometimes hallucinating a search when a local file read would have sufficed. The customtools variant is specifically tuned to prioritize tools like view_file or search_code, making it a more reliable backbone for autonomous coding agents.

This release also integrates deeply with Google Antigravity, the company’s new agentic development platform. Developers can now use a new ‘medium’ thinking level. This lets you toggle the ‘reasoning budget’: use high-depth thinking for complex debugging, then drop to medium or low for standard API calls to save on latency and cost.
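One way to apply that toggle is to route each task type to a thinking level before building the request. This is a sketch under stated assumptions: the `generationConfig.thinkingConfig.thinkingLevel` field names and the `"low"`/`"medium"`/`"high"` values should be verified against the current Gemini API reference, and the task categories and routing rule are hypothetical.

```python
# Sketch: choose a thinking level per task and build a generateContent-style body.
# ASSUMPTIONS: field names ("thinkingConfig", "thinkingLevel") and level values
# must be checked against the Gemini API docs; the task routing is hypothetical.
THINKING_LEVELS = ("low", "medium", "high")


def choose_thinking_level(task: str) -> str:
    """Hypothetical routing: deep reasoning for hard work, cheaper levels elsewhere."""
    if task in {"debug", "refactor", "proof"}:
        return "high"
    if task in {"summarize", "review"}:
        return "medium"
    return "low"


def build_request(prompt: str, task: str) -> dict:
    """Return a request body carrying the chosen thinking level."""
    level = choose_thinking_level(task)
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingLevel": level}},
    }
```

Keeping the routing in one small function means the latency/cost trade-off can be tuned in a single place as you learn which tasks actually benefit from deeper thinking.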

API Breaking Changes and New File Methods

For those already building on the Gemini API, there is a small but critical breaking change. In the Interactions API v1beta, the field total_reasoning_tokens has been renamed to total_thought_tokens. This change aligns with the ‘thought signatures’ introduced in the Gemini 3 family: encrypted representations of the model’s internal reasoning that must be passed back to the model to maintain context in multi-turn agentic workflows.
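During the migration, a small shim can read the count under either name, so code keeps working against both old and new responses. The two field names come from the article; treating the usage data as a flat mapping of counters is an assumption for illustration.

```python
# Read the thought-token count across the v1beta rename.
# Field names are from the article; the flat usage-mapping shape is assumed.
from typing import Any, Mapping


def thought_tokens(usage: Mapping[str, Any]) -> int:
    """Prefer the new 'total_thought_tokens'; fall back to the old 'total_reasoning_tokens'."""
    if "total_thought_tokens" in usage:
        return int(usage["total_thought_tokens"])
    if "total_reasoning_tokens" in usage:
        return int(usage["total_reasoning_tokens"])
    return 0  # neither field present, e.g. a response with no thinking
```

Thought signatures are a different matter: they are opaque, so there is nothing to parse. Since they must be passed back, the simplest pattern is likely to append the model’s returned turn to the conversation history unchanged.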

The model’s appetite for data has also grown. Key updates to file handling include:

- 100MB File Limit: The previous 20MB cap for API uploads has been quintupled to 100MB.

- Direct YouTube Support: You can now pass a YouTube URL directly as a media source. The model ‘watches’ the video via the URL rather than requiring a manual upload.

- Cloud Integration: Support for Cloud Storage buckets and private database pre-signed URLs as direct data sources.

The Economics of Intelligence

Pricing for Gemini 3.1 Pro Preview remains aggressive. For prompts under 200k tokens, input costs $2 per 1 million tokens, and output costs $12 per 1 million. For contexts exceeding 200k, the price rises to $4 for input and $18 for output.
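The tiered prices translate into a simple per-request estimate. This sketch uses the figures above and makes two assumptions the article does not spell out: the tier is selected by prompt size and then applies to the whole request (input and output), and a prompt of exactly 200k tokens falls in the lower tier.

```python
# Estimate the cost of one request from the quoted preview prices.
# ASSUMPTIONS: prompt size picks the tier, the tier applies to both input and
# output, and a prompt of exactly 200k tokens counts as the lower tier.
TIER_THRESHOLD = 200_000

# (input $, output $) per 1M tokens
PRICES_PER_MILLION = {
    "standard": (2.00, 12.00),
    "long_context": (4.00, 18.00),
}


def request_cost(prompt_tokens: int, output_tokens: int) -> float:
    """Return the estimated dollar cost of a single request."""
    tier = "standard" if prompt_tokens <= TIER_THRESHOLD else "long_context"
    input_price, output_price = PRICES_PER_MILLION[tier]
    return (prompt_tokens * input_price + output_tokens * output_price) / 1_000_000
```

For example, a 100k-token prompt with a 10k-token answer comes to $0.32, while a 500k-token prompt with a 20k-token answer lands in the higher tier at $2.36.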

Compared with competitors like Claude Opus 4.6 or GPT-5.2, the Google team is positioning Gemini 3.1 Pro as the ‘efficiency leader.’ According to data from Artificial Analysis, Gemini 3.1 Pro now holds the top spot on their Intelligence Index while costing roughly half as much to run as its nearest frontier peers.

Key Takeaways

- Massive 1M/65K Context Window: The model maintains a 1M token input window for large-scale data and repositories, while significantly upgrading the output limit to 65k tokens for long-form code and document generation.

- A Leap in Logic and Reasoning: Performance on the ARC-AGI-2 benchmark reached 77.1%, representing more than double the reasoning capability of previous versions. It also achieved 94.1% on GPQA Diamond for graduate-level science tasks.

- Dedicated Agentic Endpoints: The Google team launched a specialized gemini-3.1-pro-preview-customtools endpoint. It is specifically optimized to prioritize bash commands and system tools (like view_file and search_code) for more reliable autonomous agents.

- API Breaking Change: Developers must update their codebases, as the field total_reasoning_tokens has been renamed to total_thought_tokens in the v1beta Interactions API to better align with the model’s internal ‘thought’ processing.

- Enhanced File and Media Handling: The API file size limit has increased from 20MB to 100MB. Additionally, developers can now pass YouTube URLs directly into the prompt, allowing the model to analyze video content without needing to download or re-upload files.
