Google Research has unveiled a method for fine-tuning large language models (LLMs) that cuts the amount of required training data by up to 10,000x while maintaining or even improving model quality. The approach centers on active learning: expert labeling effort is focused on the most informative examples, the “boundary cases” where model uncertainty peaks.

The Conventional Bottleneck

Fine-tuning LLMs for tasks that demand deep contextual and cultural understanding, such as ad content safety or moderation, has typically required massive, high-quality labeled datasets. Most data is benign, so for policy violation detection only a small fraction of examples matter, which drives up the cost and complexity of data curation. Standard methods also struggle to keep up when policies or problematic patterns shift, necessitating expensive retraining.

Google’s Active Learning Breakthrough

How It Works:

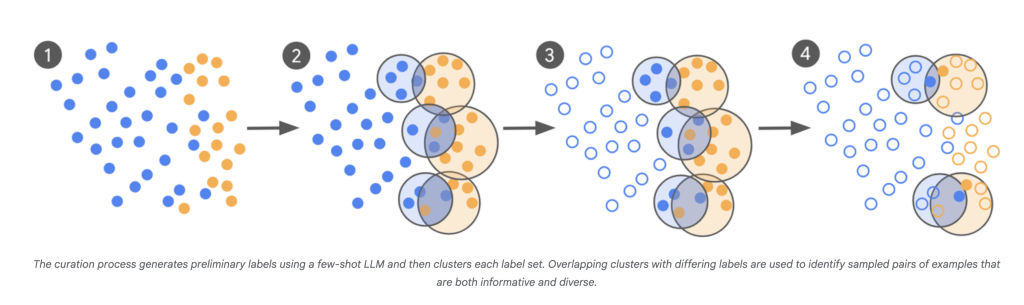

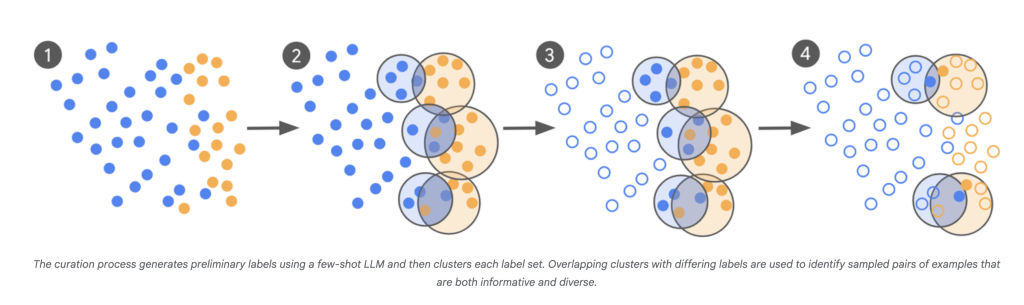

- LLM-as-Scout: The LLM scans a vast corpus (hundreds of billions of examples) and identifies the cases it is least certain about.

- Targeted Expert Labeling: Instead of labeling thousands of random examples, human experts annotate only these borderline, confusing items.

- Iterative Curation: This process repeats, with each batch of new “problematic” examples informed by the latest model’s confusion points.

- Rapid Convergence: Models are fine-tuned over multiple rounds, and iteration continues until the model’s output aligns closely with expert judgment. Alignment is measured by Cohen’s Kappa, which compares agreement between annotators beyond chance.
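The loop described above can be sketched in a few lines of Python. This is a toy illustration only, not Google's implementation: the "model" is a hypothetical one-dimensional threshold classifier, `expert_label` stands in for a human expert, `refit` stands in for fine-tuning, and plain agreement rate on a small evaluation grid replaces Cohen's Kappa as the stopping criterion. The selection rule is simple uncertainty sampling (predicted probability closest to 0.5), which is an assumption about how "least certain" might be operationalized.

```python
import math
from typing import List, Tuple


def p_violation(x: float, threshold: float) -> float:
    """Toy stand-in for the LLM: probability that item x violates policy."""
    return 1.0 / (1.0 + math.exp(-20.0 * (x - threshold)))


def most_uncertain(pool: List[float], threshold: float, budget: int) -> List[float]:
    """Uncertainty sampling: items whose predicted probability is closest to 0.5."""
    return sorted(pool, key=lambda x: abs(p_violation(x, threshold) - 0.5))[:budget]


def expert_label(x: float) -> bool:
    """Hypothetical expert judgment: the true policy boundary sits at 0.7."""
    return x > 0.7


def refit(labeled: List[Tuple[float, bool]]) -> float:
    """Toy 'fine-tuning': put the threshold midway between the labeled classes."""
    max_benign = max(x for x, is_violation in labeled if not is_violation)
    min_violation = min(x for x, is_violation in labeled if is_violation)
    return (max_benign + min_violation) / 2.0


def agreement(threshold: float, eval_set: List[float]) -> float:
    """Fraction of evaluation items where the model matches the expert."""
    hits = sum((x > threshold) == expert_label(x) for x in eval_set)
    return hits / len(eval_set)


pool = [i / 100 for i in range(1, 100)]   # unlabeled corpus (toy)
eval_set = [i / 50 for i in range(51)]    # evaluation grid (toy)
labeled = [(0.0, False), (1.0, True)]     # one seed label per class
threshold = refit(labeled)
rounds = 0

while agreement(threshold, eval_set) < 1.0 and rounds < 10:
    batch = most_uncertain(pool, threshold, budget=5)     # LLM-as-scout
    labeled += [(x, expert_label(x)) for x in batch]      # targeted expert labeling
    pool = [x for x in pool if x not in batch]
    threshold = refit(labeled)                            # next fine-tuning round
    rounds += 1
    print(f"round {rounds}: threshold={threshold:.3f}, "
          f"agreement={agreement(threshold, eval_set):.3f}")
```

Because each batch is drawn from around the current decision boundary, every round of labels narrows the region where the model and the expert can still disagree, which is why so few labels are needed compared with random sampling.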

Impact:

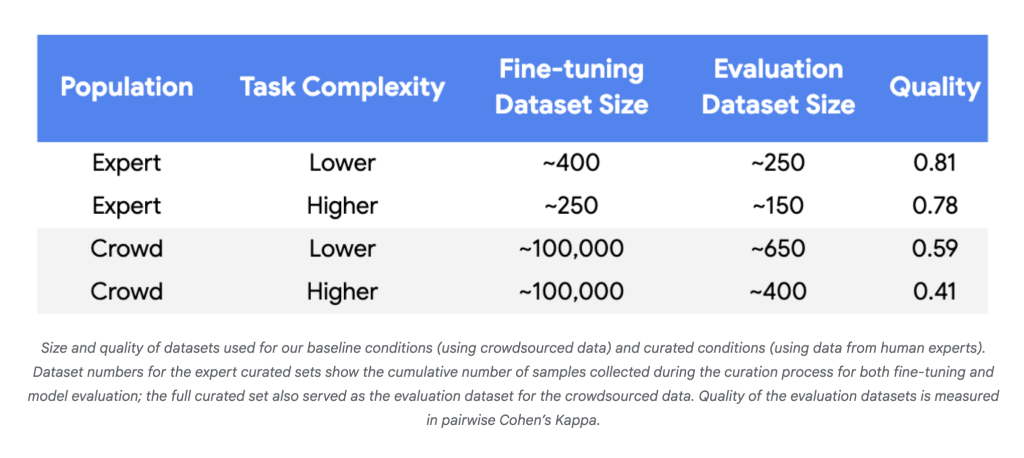

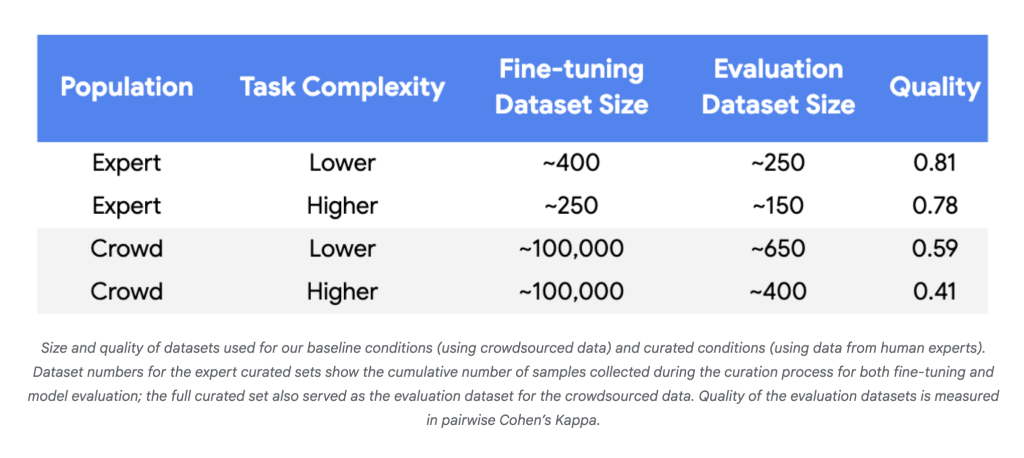

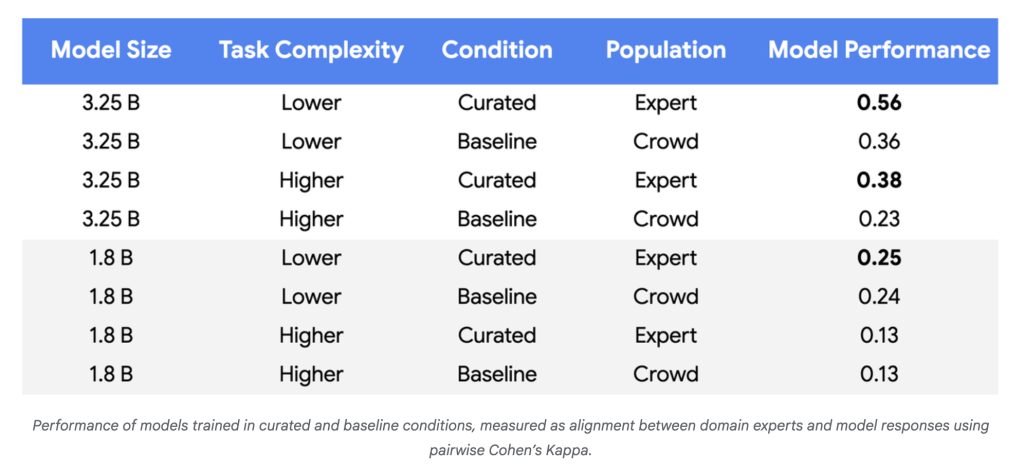

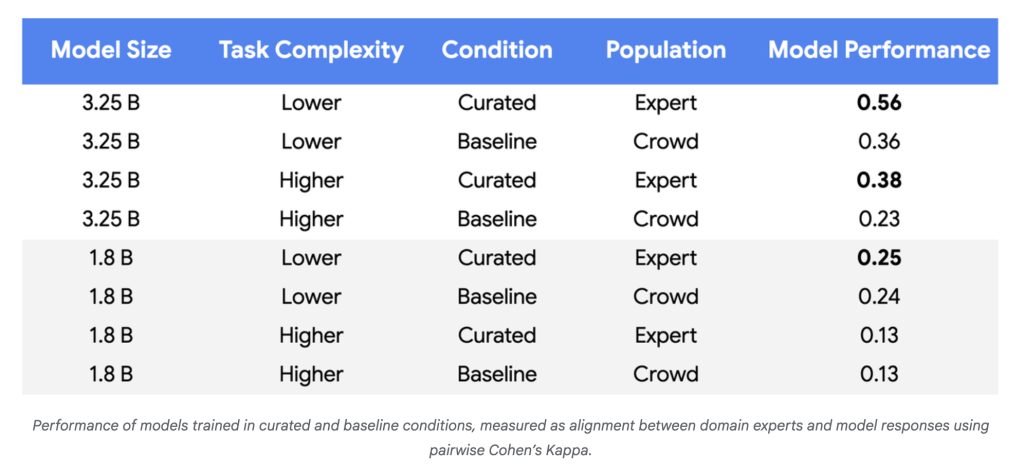

- Data Needs Plummet: In experiments with Gemini Nano-1 and Nano-2 models, alignment with human experts reached parity or better using 250–450 well-chosen examples rather than ~100,000 random crowdsourced labels, a reduction of more than two orders of magnitude (roughly 220x to 400x fewer labels).

- Model Quality Rises: For more complex tasks and larger models, performance improvements reached 55–65% over baseline, demonstrating more reliable alignment with policy experts.

- Label Efficiency: For reliable gains using tiny datasets, high label quality was consistently necessary (Cohen’s Kappa > 0.8).
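For readers unfamiliar with the metric, Cohen's Kappa is defined as (p_o - p_e) / (1 - p_e), where p_o is the observed agreement between two raters and p_e is the agreement expected by chance from each rater's label frequencies. A minimal sketch follows; the function name and the sample labels are illustrative, not taken from Google's experiments.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's Kappa for two raters labeling the same items."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(a), Counter(b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is undefined
        # here, and returning 1.0 is a convention chosen for this sketch.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Illustrative labels: 1 = policy violation, 0 = benign.
expert = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
kappa = cohens_kappa(expert, model)
print(f"raw agreement = 0.90, kappa = {kappa:.3f}")
```

In this example the two raters agree on 9 of 10 items, yet Kappa is only about 0.615, because most of that agreement is expected by chance when benign items dominate. This is why Kappa, rather than raw accuracy, is a sensible yardstick for imbalanced policy-violation data.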

Why It Matters

This approach flips the traditional paradigm. Rather than drowning models in vast pools of noisy, redundant data, it combines the LLM’s ability to identify ambiguous cases with the domain expertise of human annotators, applied where their input is most valuable. The benefits are substantial:

- Cost Reduction: Vastly fewer examples to label, dramatically reducing labor and capital expenditure.

- Faster Updates: The ability to retrain models on a handful of examples makes adaptation to new abuse patterns, policy changes, or domain shifts rapid and feasible.

- Societal Impact: Enhanced capacity for contextual and cultural understanding increases the safety and reliability of automated systems handling sensitive content.

In Summary

Google’s new method enables LLM fine-tuning on complex, evolving tasks with just hundreds (not hundreds of thousands) of targeted, high-fidelity labels, pointing toward far leaner, more agile, and more cost-effective model development.