AI agents are no longer just chatbots that spit out answers. They're evolving into complex systems that can reason step by step, call APIs, update dashboards, and collaborate with humans in real time. But this raises a key question: how should agents talk to user interfaces?

Ad-hoc sockets and custom APIs can work for prototypes, but they don't scale. Each project reinvents how to stream outputs, manage tool calls, or handle user corrections. That's exactly the gap the AG-UI (Agent–User Interaction) Protocol aims to fill.

What AG-UI Brings to the Table

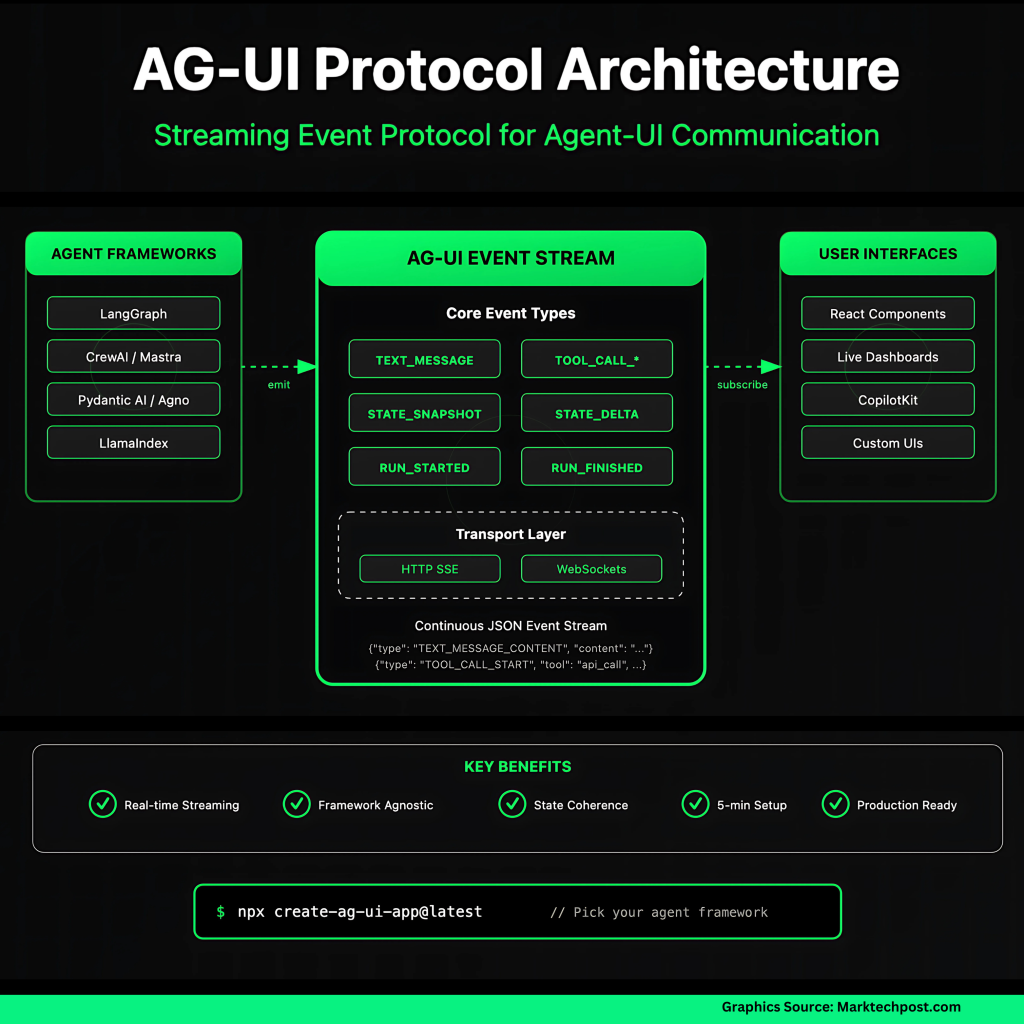

AG-UI is a streaming event protocol designed for agent-to-UI communication. Instead of returning a single blob of text, agents emit a continuous sequence of JSON events:

- TEXT_MESSAGE_CONTENT for streaming responses token by token.

- TOOL_CALL_START / ARGS / END for external function calls.

- STATE_SNAPSHOT and STATE_DELTA for keeping UI state in sync with the backend.

- Lifecycle events (RUN_STARTED, RUN_FINISHED) to frame each interaction.

All of this flows over standard transports like HTTP SSE or WebSockets, so developers don't have to build custom protocols. The frontend subscribes once and can render partial results, update charts, and even send user corrections mid-run.
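To make the shape of such a stream concrete, here is a minimal Python sketch of one run serialized as Server-Sent Events. The event type names come from the list above; the field names (`runId`, `messageId`, `delta`) and the helper functions are illustrative assumptions, not the official SDK, so check them against the AG-UI spec before relying on them.

```python
import json
from typing import Iterator


def run_events(run_id: str, tokens: list[str]) -> Iterator[dict]:
    """Yield one hypothetical agent run as a sequence of AG-UI-style events."""
    yield {"type": "RUN_STARTED", "runId": run_id}
    for token in tokens:
        # One event per token lets the UI render partial text immediately.
        yield {"type": "TEXT_MESSAGE_CONTENT", "messageId": "msg-1", "delta": token}
    yield {"type": "RUN_FINISHED", "runId": run_id}


def to_sse(event: dict) -> str:
    """Frame one event as an SSE message: a data line followed by a blank line."""
    return f"data: {json.dumps(event)}\n\n"


if __name__ == "__main__":
    for event in run_events("run-42", ["Hel", "lo"]):
        print(to_sse(event), end="")
```

A real backend would write these frames to an HTTP response with the `text/event-stream` content type; the point here is only that the UI receives small, typed JSON events rather than one final blob.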

This design makes AG-UI more than a messaging layer: it's a contract between agents and UIs. Backend frameworks can evolve, UIs can change, but as long as they speak AG-UI, everything stays interoperable.

First-Party and Partner Integrations

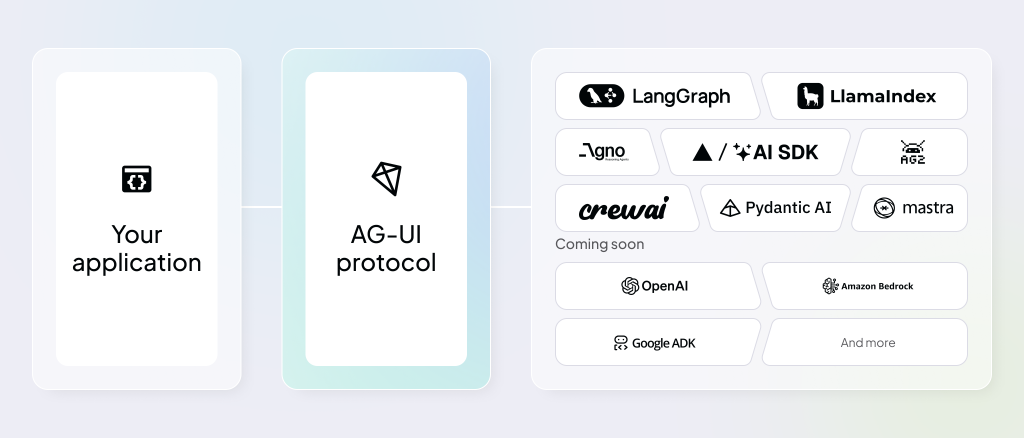

One reason AG-UI is gaining traction is its breadth of supported integrations. Instead of leaving developers to wire everything manually, many agent frameworks already ship with AG-UI support.

- Mastra (TypeScript): Native AG-UI support with strong typing, ideal for finance and data-driven copilots.

- LangGraph: AG-UI integrated into orchestration workflows so every node emits structured events.

- CrewAI: Multi-agent coordination exposed to UIs via AG-UI, letting users follow and guide “agent crews.”

- Agno: Full-stack multi-agent systems with AG-UI-ready backends for dashboards and ops tools.

- LlamaIndex: Adds interactive data retrieval workflows with live evidence streaming to UIs.

- Pydantic AI: Python SDK with AG-UI baked in, plus example apps like the AG-UI Dojo.

- CopilotKit: Frontend toolkit offering React components that subscribe to AG-UI streams.

Other integrations are in progress, including AWS Bedrock Agents, Google ADK, and Cloudflare Agents, which will make AG-UI accessible on major cloud platforms. Language SDKs are also expanding: Kotlin support is complete, while .NET, Go, Rust, Nim, and Java are in development.

Real-World Use Cases

Healthcare, finance, and analytics teams use AG-UI to turn critical data streams into live, context-rich interfaces: clinicians see patient vitals update without page reloads, stock traders trigger a stock-analysis agent and watch results stream inline, and analysts view a LangGraph-powered dashboard that visualizes charting plans token by token as the agent reasons.

Beyond data display, AG-UI simplifies workflow automation. Common patterns such as data migration, research summarization, and form-filling are reduced to a single SSE event stream instead of custom sockets or polling loops. Because agents emit only STATE_DELTA patches, the UI refreshes just the pieces that changed, cutting bandwidth and eliminating jarring reloads. The same mechanism powers 24/7 customer-support bots that show typing indicators, tool-call progress, and final answers within one chat window, keeping users engaged throughout the interaction.
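As a rough illustration of why deltas are cheap, the sketch below applies a patch to a previously received snapshot. It assumes the STATE_DELTA payload is a JSON Patch-style list of operations; `apply_delta` is a hypothetical helper that handles only `add`, `replace`, and `remove` on nested dictionaries, and the patient data is made up for the example.

```python
import copy


def apply_delta(state: dict, patch: list[dict]) -> dict:
    """Return a new state with JSON Patch-style ops applied (add, replace, remove only)."""
    new_state = copy.deepcopy(state)
    for op in patch:
        # "/patient/heartRate" -> ["patient", "heartRate"]; dict keys only,
        # no array indices or escaped characters in this sketch.
        *parents, leaf = op["path"].lstrip("/").split("/")
        target = new_state
        for key in parents:
            target = target[key]
        if op["op"] in ("add", "replace"):
            target[leaf] = op["value"]
        elif op["op"] == "remove":
            del target[leaf]
        else:
            raise ValueError(f"unsupported op: {op['op']}")
    return new_state


# Payload of an earlier STATE_SNAPSHOT event (illustrative data).
snapshot = {"patient": {"heartRate": 72, "spo2": 98}}
# Payload of a later STATE_DELTA event: only the changed field travels over the wire.
delta = [{"op": "replace", "path": "/patient/heartRate", "value": 75}]

print(apply_delta(snapshot, delta))
```

Because the function returns a fresh copy, a UI layer can compare old and new state and re-render only the widgets bound to the fields that changed. In production, a full JSON Patch library is a safer choice than this hand-rolled subset.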

For developers, the protocol enables code assistants and multi-agent applications with minimal glue code. Experiences that mirror GitHub Copilot, with real-time suggestions streaming into editors, are built by simply listening to AG-UI events. Frameworks such as LangGraph, CrewAI, and Mastra already emit the spec's 16 event types, so teams can swap back-end agents while the front-end remains unchanged. This decoupling speeds prototyping across domains: tax software can show optimistic deduction estimates while validation runs in the background, and a CRM page can autofill client details as an agent returns structured data to a Svelte + Tailwind UI.
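The decoupling can be sketched as a single event handler that never needs to know which backend produced the stream. Everything below is a hypothetical illustration: `ChatView`, `apply_event`, the two sample streams, and the `toolCallId` and `delta` field names are assumptions for the example, not part of any SDK.

```python
from dataclasses import dataclass, field


@dataclass
class ChatView:
    """Hypothetical UI model: text shown so far plus tool-call arguments in flight."""
    text: str = ""
    tool_args: dict = field(default_factory=dict)
    running: bool = False


def apply_event(view: ChatView, event: dict) -> ChatView:
    """Fold one event into the view; unknown event types are ignored."""
    kind = event["type"]
    if kind == "RUN_STARTED":
        view.running = True
    elif kind == "TEXT_MESSAGE_CONTENT":
        view.text += event["delta"]
    elif kind == "TOOL_CALL_START":
        view.tool_args[event["toolCallId"]] = ""
    elif kind == "TOOL_CALL_ARGS":
        # Arguments arrive as string fragments that concatenate into JSON.
        view.tool_args[event["toolCallId"]] += event["delta"]
    elif kind == "RUN_FINISHED":
        view.running = False
    return view


# Two stand-in backends emitting the same vocabulary of events.
backend_a = [
    {"type": "RUN_STARTED"},
    {"type": "TEXT_MESSAGE_CONTENT", "delta": "Hi"},
    {"type": "RUN_FINISHED"},
]
backend_b = [
    {"type": "RUN_STARTED"},
    {"type": "TOOL_CALL_START", "toolCallId": "t1"},
    {"type": "TOOL_CALL_ARGS", "toolCallId": "t1", "delta": '{"q": '},
    {"type": "TOOL_CALL_ARGS", "toolCallId": "t1", "delta": '"tax"}'},
    {"type": "TEXT_MESSAGE_CONTENT", "delta": "Done"},
    {"type": "RUN_FINISHED"},
]

for stream in (backend_a, backend_b):
    view = ChatView()
    for event in stream:
        apply_event(view, event)
    print(view)
```

Swapping `backend_a` for `backend_b` changes nothing in `apply_event`, which is the property the paragraph above describes.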

AG-UI Dojo

CopilotKit has also recently launched AG-UI Dojo, a “learning-first” suite of minimal, runnable demos that teach and validate AG-UI integrations end-to-end. Each demo includes a live preview, code, and linked docs, covering six primitives needed for production agent UIs: agentic chat (streaming + tool hooks), human-in-the-loop planning, agentic generative UI, tool-based generative UI, shared state, and predictive state updates for real-time collaboration. Teams can use the Dojo as a checklist to troubleshoot event ordering, payload shape, and UI–agent state sync before shipping, reducing integration ambiguity and debugging time.
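For a sense of what an event-ordering check might look like, here is a small hypothetical validator. It is not the Dojo's code. The rules it enforces (a run starts with RUN_STARTED, ends with RUN_FINISHED, and every tool call is opened before it receives arguments or ends) are assumptions drawn from the event descriptions in this article; the actual spec may allow other endings, such as an error event.

```python
def check_run(events: list[dict]) -> list[str]:
    """Return a list of ordering problems; an empty list means the run looks well-formed."""
    problems = []
    types = [e["type"] for e in events]
    if not types or types[0] != "RUN_STARTED":
        problems.append("run must begin with RUN_STARTED")
    if not types or types[-1] != "RUN_FINISHED":
        problems.append("run must end with RUN_FINISHED")

    open_calls = set()
    for e in events:
        call_id = e.get("toolCallId")
        if e["type"] == "TOOL_CALL_START":
            open_calls.add(call_id)
        elif e["type"] in ("TOOL_CALL_ARGS", "TOOL_CALL_END") and call_id not in open_calls:
            problems.append(f"{e['type']} for unopened tool call {call_id}")
        elif e["type"] == "TOOL_CALL_END":
            open_calls.discard(call_id)

    # Anything still open at the end of the run was never closed.
    problems += [f"tool call {c} never ended" for c in sorted(open_calls, key=str)]
    return problems


sample = [
    {"type": "RUN_STARTED"},
    {"type": "TOOL_CALL_START", "toolCallId": "t1"},
    {"type": "RUN_FINISHED"},
]
print(check_run(sample))
```

Running a recorded stream through a check like this before wiring up the UI can separate backend ordering bugs from rendering bugs.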

You can play around with the Dojo; its source code and more technical details are available in the accompanying blog post.

Roadmap and Community Contributions

The public roadmap shows where AG-UI is heading and where developers can plug in:

- SDK maturity: Ongoing investment in TypeScript and Python SDKs, with expansion into more languages.

- Debugging and developer tools: Better error handling, observability, and lifecycle event clarity.

- Performance and transports: Work on large payload handling and alternative streaming transports beyond SSE/WS.

- Sample apps and playgrounds: The AG-UI Dojo demonstrates building blocks for UIs and is expanding with more patterns.

On the contribution side, the community has added integrations, improved SDKs, expanded documentation, and built demos. Pull requests across frameworks like Mastra, LangGraph, and Pydantic AI have come from both maintainers and external contributors. This collaborative model ensures AG-UI is shaped by real developer needs, not just spec writers.

Summary

AG-UI is emerging as the default interaction protocol for agent UIs. It standardizes the messy middle ground between agents and frontends, making applications more responsive, transparent, and maintainable.

With first-party integrations across popular frameworks, community contributions shaping the roadmap, and tooling like the AG-UI Dojo lowering the barrier to entry, the ecosystem is maturing fast.

Launch AG-UI with a single command, choose your agent framework, and be prototyping in under 5 minutes.

npx create-ag-ui-app@latest

Then choose your agent framework. For details and patterns, see the quickstart blog: go.copilotkit.ai/ag-ui-cli-blog.

FAQs

FAQ 1: What problem does AG-UI solve?

AG-UI standardizes how agents communicate with user interfaces. Instead of ad-hoc APIs, it defines a clear event protocol for streaming text, tool calls, state updates, and lifecycle signals, making interactive UIs easier to build and maintain.

FAQ 2: Which frameworks already support AG-UI?

AG-UI has first-party integrations with Mastra, LangGraph, CrewAI, Agno, LlamaIndex, and Pydantic AI. Partner integrations include CopilotKit on the frontend. Support for AWS Bedrock Agents, Google ADK, and more languages like .NET, Go, and Rust is in progress.

FAQ 3: How does AG-UI differ from REST APIs?

REST works for single request–response tasks. AG-UI is designed for interactive agents: it supports streaming output, incremental updates, tool usage, and user input during a run, which REST cannot handle natively.

FAQ 4: What transports does AG-UI use?

By default, AG-UI runs over HTTP Server-Sent Events (SSE). It also supports WebSockets, and the roadmap includes exploration of alternative transports for high-performance or binary data use cases.
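On the receiving side, SSE is simple enough to decode by hand. The sketch below is a minimal, assumption-laden parser: it handles only `data:` lines terminated by a blank line, skips comments and other SSE fields, and assumes each message body is one JSON event. `parse_sse` and the sample `wire` lines are hypothetical; a production client would normally use an SSE library or the official SDK.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one decoded JSON event per SSE message (data lines ended by a blank line)."""
    data_lines = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            # The SSE format allows one optional space after the colon.
            data_lines.append(line[5:].removeprefix(" "))
        elif line == "" and data_lines:
            # A blank line ends the message; multiple data lines join with newlines.
            yield json.loads("\n".join(data_lines))
            data_lines = []
        # Comment lines (starting with ":") and fields like "event:" are skipped here.


wire = [
    'data: {"type": "RUN_STARTED"}\n',
    "\n",
    ": keep-alive\n",
    'data: {"type": "TEXT_MESSAGE_CONTENT", "delta": "Hi"}\n',
    "\n",
]

for event in parse_sse(wire):
    print(event["type"])
```

The same generator works on any iterable of text lines, so it can be fed from a streaming HTTP response, a recorded log file, or a test fixture.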

FAQ 5: How can developers get started with AG-UI?

You can install the official SDKs (TypeScript, Python) or use supported frameworks like Mastra or Pydantic AI. The AG-UI Dojo provides working examples and UI building blocks to experiment with event streams.

Thanks to the CopilotKit team for the thought leadership and resources for this article. The CopilotKit team has supported us in this content.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.