State-backed hackers are using Google’s Gemini AI model to support all stages of an attack, from reconnaissance to post-compromise actions.

Bad actors from China (APT31, Temp.HEX), Iran (APT42), North Korea (UNC2970), and Russia used Gemini for target profiling and open-source intelligence, generating phishing lures, translating text, coding, vulnerability testing, and troubleshooting.

Cybercriminals are also showing increased interest in AI tools and services that could help in illegal activities, such as social engineering ClickFix campaigns.

AI-enhanced malicious activity

The Google Threat Intelligence Group (GTIG) notes in a report today that APT adversaries use Gemini to support their campaigns “from reconnaissance and phishing lure creation to command and control (C2) development and data exfiltration.”

Chinese threat actors employed an expert cybersecurity persona to request that Gemini automate vulnerability analysis and provide targeted testing plans in the context of a fabricated scenario.

“The PRC-based threat actor fabricated a scenario, in one case trialing Hexstrike MCP tooling, and directing the model to analyze Remote Code Execution (RCE), WAF bypass techniques, and SQL injection test results against specific US-based targets,” Google says.

Another China-based actor frequently employed Gemini to fix their code, carry out research, and provide advice on technical capabilities for intrusions.

The Iranian adversary APT42 leveraged Google’s LLM for social engineering campaigns and as a development platform to speed up the creation of tailored malicious tools (debugging, code generation, and researching exploitation techniques).

Additional threat actor abuse was observed for implementing new capabilities into existing malware families, including the CoinBait phishing kit and the HonestCue malware downloader and launcher.

GTIG notes that no major breakthroughs have occurred in that respect, though the tech giant expects malware operators to continue integrating AI capabilities into their toolsets.

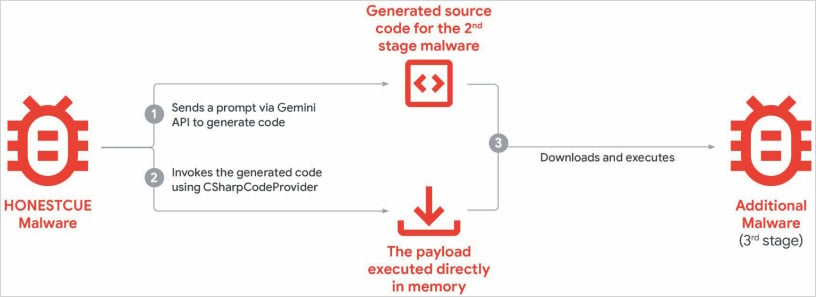

HonestCue is a proof-of-concept malware framework observed in late 2025 that uses the Gemini API to generate C# code for second-stage malware, then compiles and executes the payloads in memory.

Source: Google

CoinBait is a React SPA-wrapped phishing kit masquerading as a cryptocurrency exchange for credential harvesting. It contains artifacts indicating that its development was advanced using AI code generation tools.

One indicator of LLM use is logging messages in the malware source code that were prefixed with “Analytics:,” which could help defenders track data exfiltration processes.
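As a rough illustration of how a defender might use that indicator, the sketch below scans JavaScript source text for logging calls whose message starts with “Analytics:”. The report only describes the prefix. The `console.*` call shape, the regular expression, the function name `find_analytics_logs`, and the sample lines are all assumptions made up for this example, not details taken from the malware.

```python
import re

# Assumed pattern: a console.* call whose first string literal starts with
# "Analytics:". The real samples may log differently.
ANALYTICS_LOG = re.compile(
    r"""console\.(?:log|info|warn|error|debug)\(\s*['"`]Analytics:"""
)


def find_analytics_logs(source: str) -> list[tuple[int, str]]:
    """Return (line number, stripped line) for each line matching the pattern."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        if ANALYTICS_LOG.search(line):
            hits.append((lineno, line.strip()))
    return hits


# Invented sample input, for demonstration only.
sample = "\n".join([
    "const x = 1;",
    'console.log("Analytics: form submitted", payload);',
    'console.log("ready");',
    "console.info('Analytics: wallet connected');",
])

for lineno, text in find_analytics_logs(sample):
    print(f"{lineno}: {text}")
```

A match on its own proves little, since legitimate applications may use the same prefix. It would at most flag code worth a closer look.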

Based on the malware samples, GTIG researchers believe that the malware was created using the Lovable AI platform, as the developer used the Lovable Supabase client and lovable.app.

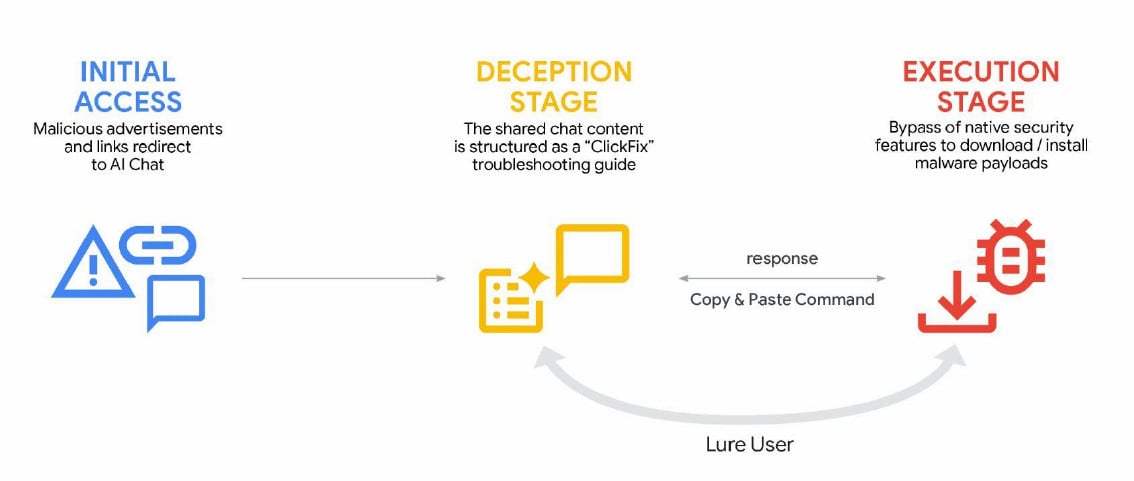

Cybercriminals also used generative AI services in ClickFix campaigns, delivering the AMOS info-stealing malware for macOS. Users were lured into executing malicious commands through malicious ads listed in search results for queries on troubleshooting specific issues.

Source: Google

The report further notes that Gemini has faced AI model extraction and distillation attempts, with organizations leveraging authorized API access to methodically query the system and reproduce its decision-making processes to replicate its functionality.

Although the problem is not a direct threat to users of these models or their data, it constitutes a significant commercial, competitive, and intellectual property problem for the creators of these models.

Essentially, actors take information obtained from one model and transfer it to another using a machine learning technique called “knowledge distillation,” which is used to train fresh models from more advanced ones.
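For readers unfamiliar with the technique, the following is a minimal sketch of the textbook distillation objective: a “student” model is trained to match the temperature-softened output distribution of a “teacher.” It is an illustration under assumptions, not a description of what the actors in the report did. An attacker with API-only access would typically see generated text rather than raw logits and would train on those outputs instead. The function names, the logit values, and the temperature are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the given temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the softened teacher distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical logits over three classes.
teacher = [1.0, 2.0, 3.0]
print(distillation_loss(teacher, [1.0, 2.0, 3.0]))  # student agrees with teacher
print(distillation_loss(teacher, [3.0, 2.0, 1.0]))  # student disagrees
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is why large volumes of teacher outputs are valuable training signal.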

“Model extraction and subsequent knowledge distillation enable an attacker to accelerate AI model development quickly and at a significantly lower cost,” GTIG researchers say.

Google flags these attacks as a threat because they constitute intellectual theft, are scalable, and severely undermine the business model of AI-as-a-service, which has the potential to impact end users soon.

In a large-scale attack of this kind, Gemini AI was targeted by 100,000 prompts that posed a series of questions aimed at replicating the model’s reasoning across a range of tasks in non-English languages.

Google has disabled accounts and infrastructure tied to documented abuse, and has implemented targeted defenses in Gemini’s classifiers to make abuse harder.

The company assures that it “designs AI systems with robust security measures and strong safety guardrails” and regularly tests the models to improve their security and safety.