It’s been a little over eight years since we first started talking about Neural Processing Units (NPUs) inside our smartphones and the early promise of on-device AI. Bonus points if you remember that the HUAWEI Mate 10’s Kirin 970 processor was the first, although similar ideas had been floating around, particularly in imaging, before then.

Of course, a lot has changed in the last eight years. Apple has finally embraced AI, albeit with mixed results, and Google has clearly leaned heavily into its Tensor Processing Unit for everything from imaging to on-device language translation. Ask any of the big tech companies, from Arm and Qualcomm to Apple and Samsung, and they’ll all tell you that AI is the future of smartphone hardware and software.

And yet the landscape for mobile AI still feels quite confined. We’re limited to a small but growing pool of on-device AI features, curated mostly by Google, with very little in the way of a creative developer ecosystem. NPUs are partly to blame, not because they’re useless, but because they’ve never been exposed as a real platform. Which raises the question: what exactly is this silicon sitting in our phones actually good for?

What’s an NPU anyway?

Robert Triggs / Android Authority

Before we can decisively answer whether phones really “need” an NPU, we should probably familiarize ourselves with what it actually does.

Just like your phone’s general-purpose CPU for running apps, its GPU for rendering games, or its ISP dedicated to crunching image and video data, an NPU is a purpose-built processor for running AI workloads as quickly and efficiently as possible. Simple enough.

Specifically, an NPU is designed to handle small data types (such as the 4-bit or even 2-bit values used by heavily quantized models), specific memory access patterns, and highly parallel mathematical operations, such as fused multiply-add and fused multiply-accumulate.
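To make the quantization and multiply-accumulate ideas concrete, here is a minimal pure-Python sketch. It assumes simple symmetric, per-tensor quantization to signed 4-bit integers, which is only one of several schemes in use; the function names and example values are hypothetical and chosen for illustration.

```python
# Minimal sketch: symmetric INT4 quantization plus an integer multiply-accumulate loop.
# Assumption: one scale per tensor; production toolchains often use per-channel scales.

INT4_MIN, INT4_MAX = -8, 7


def quantize_int4(values: list[float], scale: float) -> list[int]:
    """Map floats to signed 4-bit integers by dividing by `scale` and clamping."""
    return [max(INT4_MIN, min(INT4_MAX, round(v / scale))) for v in values]


def int_dot(a_q: list[int], b_q: list[int]) -> int:
    """Dot product as a chain of multiply-accumulate steps: acc += a * b.

    Hardware typically keeps `acc` in a wider register (e.g. 32 bits) so that
    sums of small products do not overflow; Python ints are unbounded anyway.
    """
    acc = 0
    for a, b in zip(a_q, b_q):
        acc += a * b  # one multiply-accumulate (MAC) step
    return acc


weights = [0.42, -0.15, 0.88, -0.61]
activations = [1.0, 0.4, -0.25, 0.75]

# Pick each scale so the largest magnitude maps to the top of the INT4 range.
w_scale = max(abs(w) for w in weights) / INT4_MAX
a_scale = max(abs(x) for x in activations) / INT4_MAX

w_q = quantize_int4(weights, w_scale)
a_q = quantize_int4(activations, a_scale)

# Rescale the integer result back to floating point once, at the end.
approx = int_dot(w_q, a_q) * w_scale * a_scale
exact = sum(w * x for w, x in zip(weights, activations))
print(w_q, a_q, round(approx, 4), round(exact, 4))
```

The approximate and exact results differ slightly: that lost precision is the trade-off quantized models accept in exchange for a much smaller memory footprint and cheaper integer arithmetic.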

Mobile NPUs have taken hold because they run AI workloads that traditional processors struggle with.

Now, as I said back in 2017, you don’t strictly need an NPU to run machine learning workloads. Plenty of smaller algorithms can run on even a modest CPU, while the data centers powering various Large Language Models run on hardware that’s closer to an NVIDIA graphics card than to the NPU in your phone.

However, a dedicated NPU can help you run models that your CPU or GPU can’t handle at speed, and it can often perform tasks more efficiently. What this heterogeneous approach to computing costs in complexity and silicon area, it can win back in power efficiency and performance, which are obviously key for smartphones. No one wants their phone’s AI tools to eat up their battery.

Wait, but doesn’t AI also run on graphics cards?

Oliver Cragg / Android Authority

If you’ve been following the ongoing RAM price crisis, you’ll know that AI data centers and the demand for powerful AI and GPU accelerators, particularly those from NVIDIA, are driving the shortages.

What makes NVIDIA’s CUDA architecture so effective for AI workloads (as well as graphics) is that it’s massively parallel, with tensor cores that perform fused matrix multiply-accumulate (MMA) operations across a wide range of matrix and data formats, including the tiny bit-depths used by modern quantized models.
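Conceptually, a tensor core computes D = A × B + C on small, fixed-size tiles, and a large matrix multiplication is broken into many such tile operations. The pure-Python sketch below models only that arithmetic decomposition. It is not CUDA code, and the 2×2 tile size is an arbitrary assumption.

```python
# Arithmetic model of tile-based matrix multiply-accumulate (MMA): D = A x B + C.
# Assumption: square tiles of size TILE that evenly divide every matrix dimension.

TILE = 2

Matrix = list[list[int]]


def mma_tile(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """One MMA operation on a tile: returns a @ b + c."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [c[i][j] + sum(a[i][p] * b[p][j] for p in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def tile_of(m: Matrix, r0: int, c0: int) -> Matrix:
    """Extract the TILE x TILE block whose top-left corner is (r0, c0)."""
    return [row[c0:c0 + TILE] for row in m[r0:r0 + TILE]]


def blocked_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Full matrix product assembled from per-tile MMA operations."""
    n, k, m = len(a), len(b), len(b[0])
    if n % TILE or k % TILE or m % TILE:
        raise ValueError("dimensions must be multiples of TILE")
    out = [[0] * m for _ in range(n)]
    for i0 in range(0, n, TILE):
        for j0 in range(0, m, TILE):
            acc = [[0] * TILE for _ in range(TILE)]  # accumulator tile C
            for p0 in range(0, k, TILE):
                # Each step folds one A-tile x B-tile product into the accumulator.
                acc = mma_tile(tile_of(a, i0, p0), tile_of(b, p0, j0), acc)
            for i in range(TILE):
                out[i0 + i][j0:j0 + TILE] = acc[i]
    return out
```

In real hardware the tile shapes are fixed by the architecture, the inputs are often FP16, BF16, INT8, or smaller, and the accumulator is usually kept at a higher precision than the inputs.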

While modern mobile GPUs, like Arm’s Mali and Qualcomm’s Adreno lineups, support 16-bit and, increasingly, 8-bit data types with highly parallel math, they don’t execute very small, heavily quantized models (such as INT4 or lower) with anywhere near the same efficiency. Likewise, despite supporting these formats on paper and offering substantial parallelism, they aren’t optimized for AI as a primary workload.
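One practical reason sub-8-bit formats are awkward for hardware without native support is storage: INT4 values are usually packed two to a byte, so a processor lacking 4-bit arithmetic has to unpack and sign-extend each nibble before it can multiply. This minimal sketch assumes a low-nibble-first layout; actual packing orders vary between frameworks and vendors, and the function names are hypothetical.

```python
# Sketch of INT4 packing: two signed 4-bit values per byte, low nibble first (an assumption).


def pack_int4(values: list[int]) -> bytes:
    """Pack an even-length list of ints in [-8, 7] into bytes."""
    if len(values) % 2:
        raise ValueError("need an even number of values")
    out = bytearray()
    for lo, hi in zip(values[0::2], values[1::2]):
        if not (-8 <= lo <= 7 and -8 <= hi <= 7):
            raise ValueError("value out of INT4 range")
        # & 0x0F keeps the two's-complement low 4 bits of each value.
        out.append((lo & 0x0F) | ((hi & 0x0F) << 4))
    return bytes(out)


def unpack_int4(data: bytes) -> list[int]:
    """Reverse pack_int4: split each byte into nibbles and sign-extend them."""
    values = []
    for byte in data:
        for nibble in (byte & 0x0F, byte >> 4):
            # Nibbles 8..15 represent the negative values -8..-1.
            values.append(nibble - 16 if nibble >= 8 else nibble)
    return values
```

That unpacking step is cheap per value but adds up across millions of weights, which is part of why dedicated low-precision datapaths matter.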

Mobile GPUs focus on efficiency; they’re far less powerful for AI than their desktop rivals.

Unlike beefy desktop graphics chips, mobile GPU architectures are designed first and foremost for power efficiency, using concepts such as tile-based rendering pipelines and sliced execution units that aren’t entirely suited to sustained, compute-intensive workloads. Mobile GPUs can certainly perform AI compute and are quite good at it in some situations, but for highly specialized operations, there are often more power-efficient options.

Software development is the other, equally important half of the equation. NVIDIA’s CUDA exposes key architectural details to developers, allowing deep, kernel-level optimizations when running AI workloads. Mobile platforms lack comparable low-level access for developers and device manufacturers, relying instead on higher-level and often vendor-specific abstractions such as Qualcomm’s Neural Processing SDK or Arm’s Compute Library.

This highlights a significant pain point for mobile AI development. While desktop development has mostly settled on CUDA (although AMD’s ROCm is gaining traction), smartphones run a variety of NPU architectures: Google’s proprietary Tensor, Qualcomm’s Snapdragon Hexagon, Apple’s Neural Engine, and more, each with its own capabilities and development platform.

NPUs haven’t solved the platform problem

Taylor Kerns / Android Authority

Smartphone chipsets that boast NPU capabilities (which is essentially all of them) are built to solve one problem: handling small data types, complex math, and challenging memory patterns efficiently without having to retool GPU architectures. However, discrete NPUs introduce new challenges, especially when it comes to third-party development.

While APIs and SDKs are available for Apple, Snapdragon, and MediaTek chips, developers have traditionally had to build and optimize their applications separately for each platform. Even Google doesn’t yet provide easy, general developer access for its AI-showcase Pixels: the Tensor ML SDK remains in experimental access, with no guarantee of a general release. Developers can experiment with higher-level Gemini Nano features via Google’s ML Kit, but that stops well short of true, low-level access to the underlying hardware.

Worse, Samsung withdrew support for its Neural SDK altogether, and Google’s more universal Android NNAPI has since been deprecated. The result is a labyrinth of specifications and abandoned APIs that makes efficient third-party mobile AI development exceedingly difficult. Vendor-specific optimizations were never going to scale, leaving us stuck with cloud-based and in-house compact models controlled by a few major vendors, such as Google.

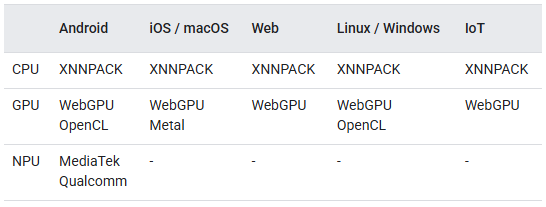

LiteRT runs on-device AI on Android, iOS, Web, IoT, and PC environments.

Thankfully, Google launched LiteRT in 2024, effectively repositioning TensorFlow Lite as a single on-device runtime that supports CPUs, GPUs, and vendor NPUs (currently Qualcomm and MediaTek). It was specifically designed to maximize hardware acceleration at runtime, leaving the software to choose the most suitable method, which addresses NNAPI’s biggest flaw. While NNAPI was meant to abstract away vendor-specific hardware, it ultimately standardized the interface rather than the behavior, leaving performance and reliability up to vendor drivers. LiteRT attempts to close that gap by owning the runtime itself.
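The idea of a runtime that owns accelerator selection can be sketched in a few lines. This is a deliberately simplified, hypothetical model written for illustration, not the LiteRT API: the `Backend` type, the operation names, and the rule “use the first available backend that supports every operation, otherwise fall back to the CPU” are all assumptions. Real runtimes can also split a single model across several accelerators.

```python
# Hypothetical model of runtime-side accelerator selection with a guaranteed CPU fallback.
# None of these names come from LiteRT; they only illustrate the idea.
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    available: bool               # e.g. driver present and working on this device
    supported_ops: frozenset[str]


def select_backend(model_ops: set[str], preferred: list[Backend]) -> str:
    """Return the first available backend (in preference order) that supports every op."""
    for backend in preferred:
        if backend.available and model_ops <= backend.supported_ops:
            return backend.name
    # Assumption: CPU reference kernels cover every op, so the CPU is always a safe fallback.
    return "cpu"


npu = Backend("npu", available=True, supported_ops=frozenset({"conv2d", "matmul"}))
gpu = Backend("gpu", available=True, supported_ops=frozenset({"conv2d", "matmul", "softmax"}))

print(select_backend({"conv2d", "matmul"}, [npu, gpu]))             # npu
print(select_backend({"conv2d", "matmul", "softmax"}, [npu, gpu]))  # gpu
print(select_backend({"attention"}, [npu, gpu]))                    # cpu
```

What matters in the comparison with NNAPI is where this decision lives: when the runtime makes it, an app no longer depends on every vendor’s driver implementing a shared interface in exactly the same way.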

Interestingly, LiteRT is designed to run inference entirely on-device across Android, iOS, embedded systems, and even desktop-class environments, signaling Google’s ambition to make it a truly cross-platform runtime for compact models. Still, unlike desktop AI frameworks or diffusion pipelines that expose dozens of runtime tuning parameters, a TensorFlow Lite model is fully specified, with precision, quantization, and execution constraints decided ahead of time so that it can run predictably on constrained mobile hardware.
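To illustrate what “fully specified” means in practice, here is a hypothetical Python sketch in which precision, quantization, and input shape are fixed when the model is converted and cannot be changed at run time. The field names are illustrative assumptions, not the actual TensorFlow Lite schema. The size arithmetic also shows why bit-width decisions matter so much on phones.

```python
# Hypothetical sketch: a model description frozen at conversion time.
# Field names are illustrative; they are not the TensorFlow Lite flatbuffer schema.
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)
class CompiledModelSpec:
    name: str
    num_params: int
    weight_bits: int              # e.g. 4 for INT4 weights, decided ahead of time
    activation_bits: int          # e.g. 8 for INT8 activations
    input_shape: tuple[int, ...]  # static shape known before deployment

    def weight_bytes(self) -> int:
        """Storage needed for the weights alone, ignoring metadata and alignment."""
        return self.num_params * self.weight_bits // 8


spec = CompiledModelSpec("demo-1b", 1_000_000_000, 4, 8, (1, 128))
print(spec.weight_bytes())  # 500000000, i.e. about 0.5 GB at 4 bits per weight

try:
    spec.weight_bits = 16  # a runtime "tuning knob" like this is not available
except FrozenInstanceError:
    print("precision is fixed at conversion time")
```

The same billion parameters stored at 16 bits would need 2 GB for the weights alone, which is why these choices are locked in before the model ever reaches a phone.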

While abstracting away the vendor-NPU problem is a major perk of LiteRT, it’s still worth asking whether NPUs will remain as central as they once were in light of other recent developments.

For instance, Arm’s new SME2 (Scalable Matrix Extension 2) for its latest C1 series of CPUs provides up to 4x CPU-side AI acceleration for some workloads, with broad framework support and no need for dedicated SDKs. It’s also possible that mobile GPU architectures will shift to better support advanced machine learning workloads, potentially reducing the need for dedicated NPUs altogether. Samsung is reportedly exploring its own GPU architecture specifically to better handle on-device AI, which could debut as early as the Galaxy S28 series. Likewise, Imagination’s E-Series is built specifically for AI acceleration, debuting support for FP8 and INT8. Maybe Pixel will eventually adopt it.
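SME’s core matrix instructions accumulate outer products of two vectors into a two-dimensional storage array called ZA, so a matrix multiplication becomes a sequence of rank-1 updates, one per step along the shared dimension. The pure-Python model below shows only that arithmetic. It does not use SME intrinsics, and real kernels work on fixed-size, vector-length-dependent tiles rather than whole matrices.

```python
# Arithmetic model of matrix multiplication as a sum of outer products,
# the pattern that SME's outer-product-accumulate instructions accelerate.

Matrix = list[list[int]]


def outer_product_accumulate(za: Matrix, col: list[int], row: list[int]) -> None:
    """In-place rank-1 update: za[i][j] += col[i] * row[j]."""
    for i, a in enumerate(col):
        for j, b in enumerate(row):
            za[i][j] += a * b


def matmul_via_outer_products(a: Matrix, b: Matrix) -> Matrix:
    """Compute a @ b as the sum over p of outer(column p of a, row p of b)."""
    n, k, m = len(a), len(b), len(b[0])
    za = [[0] * m for _ in range(n)]  # stands in for the ZA accumulator
    for p in range(k):
        column_of_a = [a[i][p] for i in range(n)]
        outer_product_accumulate(za, column_of_a, b[p])  # b[p] is row p of B
    return za


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_via_outer_products(a, b))  # [[19, 22], [43, 50]]
```

Each outer product reuses every loaded element of both vectors many times, which is what makes this formulation attractive for wide CPU matrix hardware.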

LiteRT complements these developments, freeing developers to worry less about exactly how the hardware market shakes out. Support for more complex instructions can make CPUs increasingly efficient tools for running machine learning workloads rather than a mere fallback. Meanwhile, GPUs with advanced quantization support might eventually become the default accelerators instead of NPUs, and LiteRT can handle that transition. That makes LiteRT feel closer to the mobile equivalent of CUDA we’ve been missing, not because it exposes the hardware, but because it finally abstracts it properly.

Dedicated mobile NPUs are unlikely to disappear, but apps may finally start leveraging them.

Dedicated mobile NPUs are unlikely to disappear any time soon, but the NPU-centric, vendor-locked approach that defined the first wave of on-device AI clearly isn’t the endgame. For most third-party applications, CPUs and GPUs will continue to shoulder much of the practical workload, particularly as they gain more efficient support for modern machine learning operations. What matters more than any single block of silicon is the software layer that decides how, and whether, that hardware is used.

If LiteRT succeeds, NPUs become accelerators rather than gatekeepers, and on-device mobile AI finally becomes something developers can target without betting on a specific chip vendor’s roadmap. With that in mind, there’s probably still some way to go before on-device AI has a vibrant ecosystem of third-party features to enjoy, but we’re finally inching a little closer.
