Most textual content to video fashions generate a single clip from a immediate after which cease. They don’t maintain an inner world state that persists as actions arrive over time. PAN, a brand new mannequin from MBZUAI’s Institute of Basis Fashions, is designed to fill that hole by performing as a common world mannequin that predicts future world states as video, conditioned on historical past and pure language actions.

From video generator to interactive world simulator

PAN is outlined as a common, interactable, lengthy horizon world mannequin. It maintains an inner latent state that represents the present world, then updates that state when it receives a pure language motion equivalent to ‘flip left and velocity up’ or ‘transfer the robotic arm to the crimson block.’ The mannequin then decodes the up to date state into a brief video phase that exhibits the consequence of that motion. This cycle repeats, so the identical world state evolves throughout many steps.

This design permits PAN to help open area, motion conditioned simulation. It may well roll out counterfactual futures for various motion sequences. An exterior agent can question PAN as a simulator, examine predicted futures, and select actions primarily based on these predictions.

GLP structure, separating what occurs from the way it seems

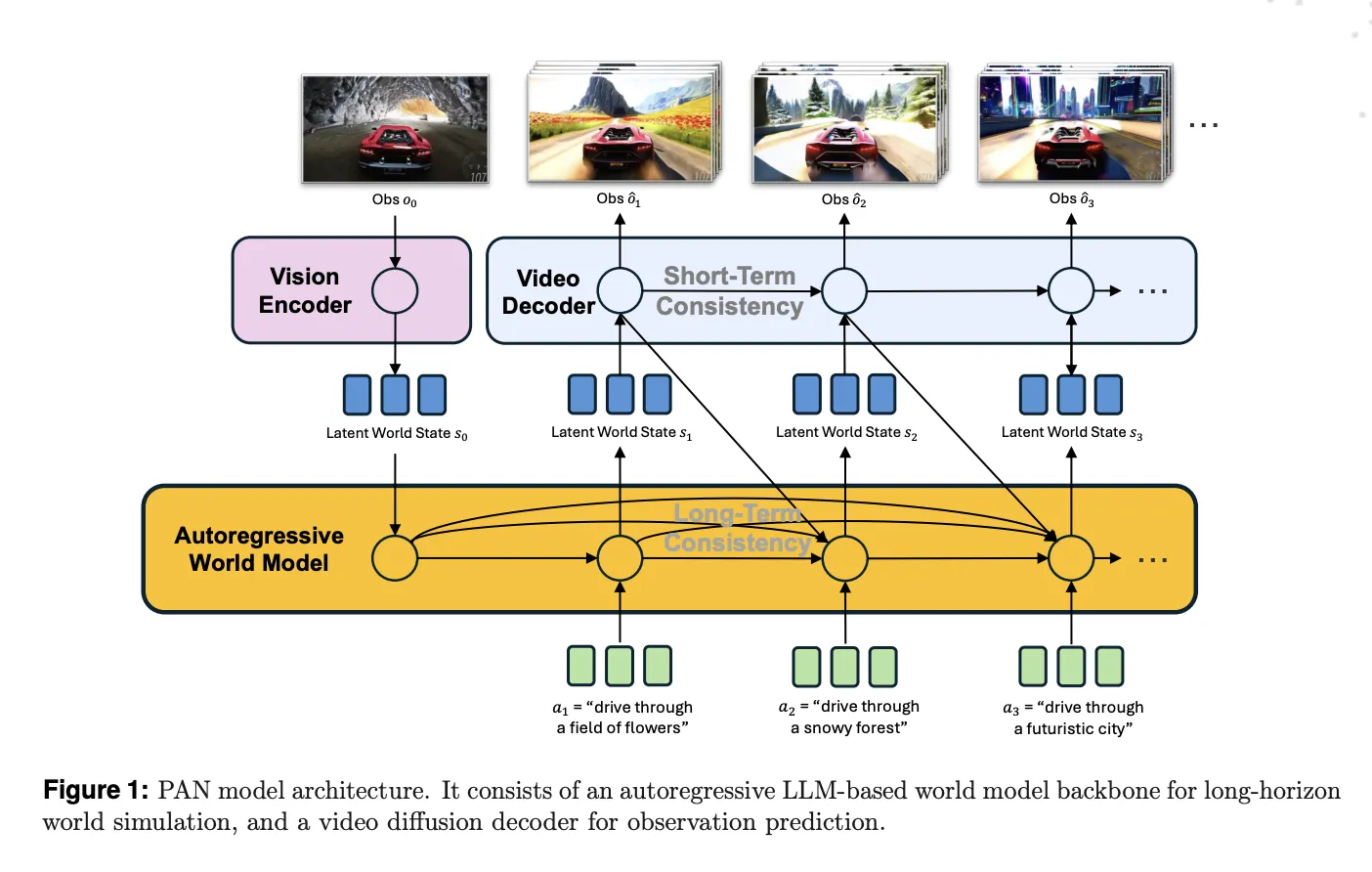

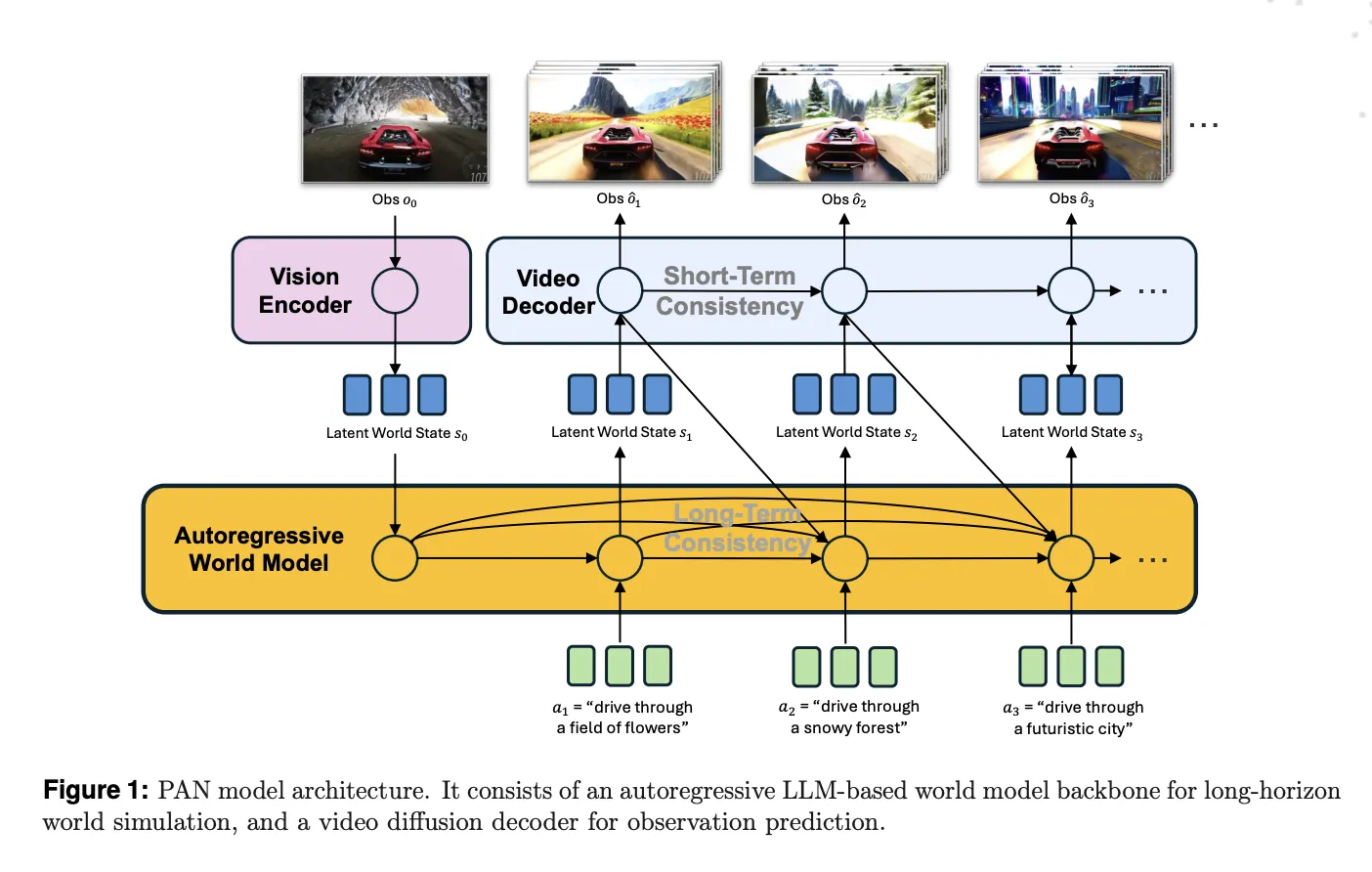

The bottom of PAN is the Generative Latent Prediction, GLP, structure. GLP separates world dynamics from visible rendering. First, a imaginative and prescient encoder maps photos or video frames right into a latent world state. Second, an autoregressive latent dynamics spine primarily based on a big language mannequin predicts the subsequent latent state, conditioned on historical past and the present motion. Third, a video diffusion decoder reconstructs the corresponding video phase from that latent state.

In PAN, the imaginative and prescient encoder and spine are constructed on Qwen2.5-VL-7B-Instruct. The imaginative and prescient tower tokenizes frames into patches and produces structured embeddings. The language spine runs over a historical past of world states and actions, plus realized question tokens, and outputs the latent illustration of the subsequent world state. These latents reside within the shared multimodal house of the VLM, which helps floor the dynamics in each textual content and imaginative and prescient.

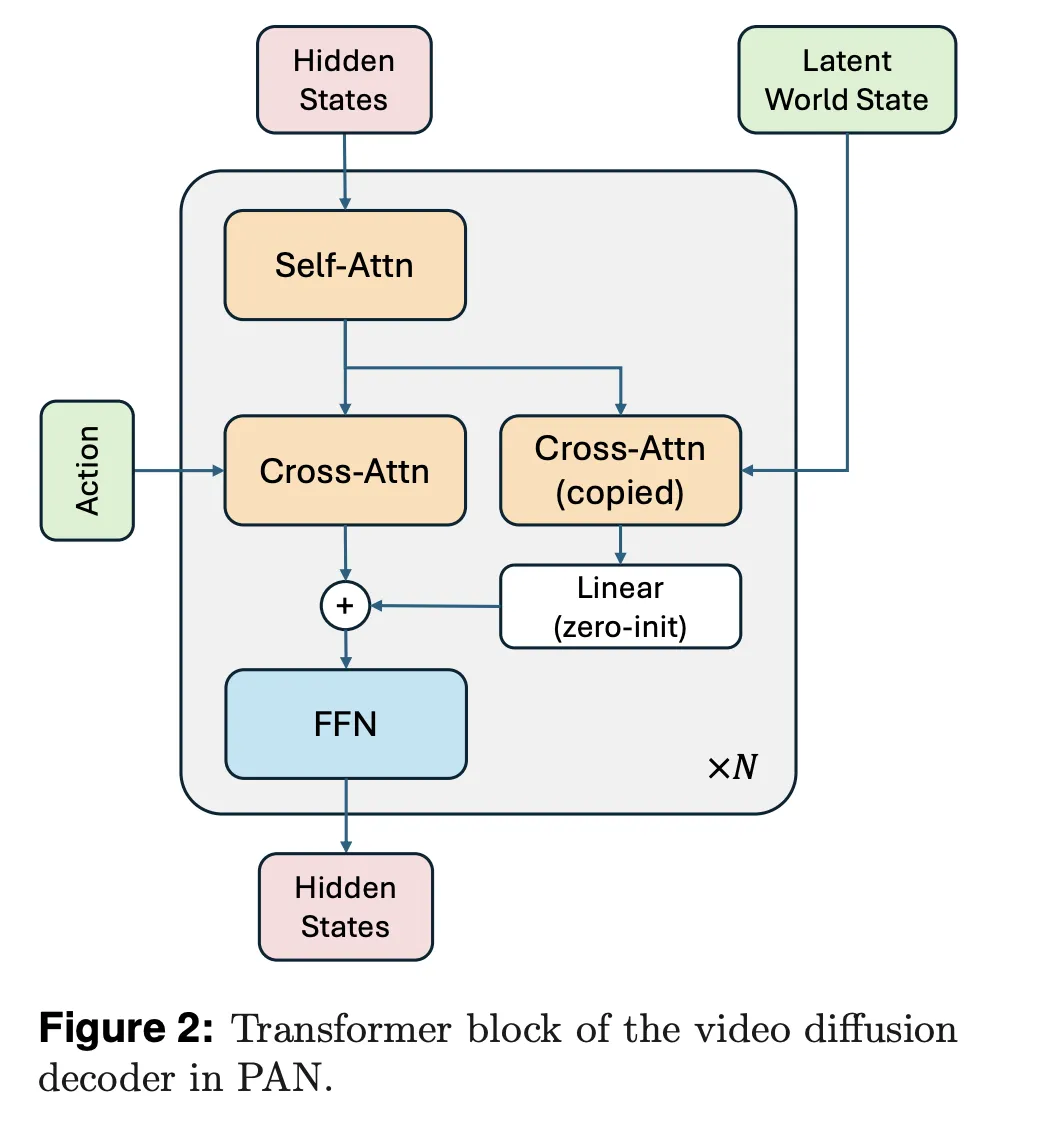

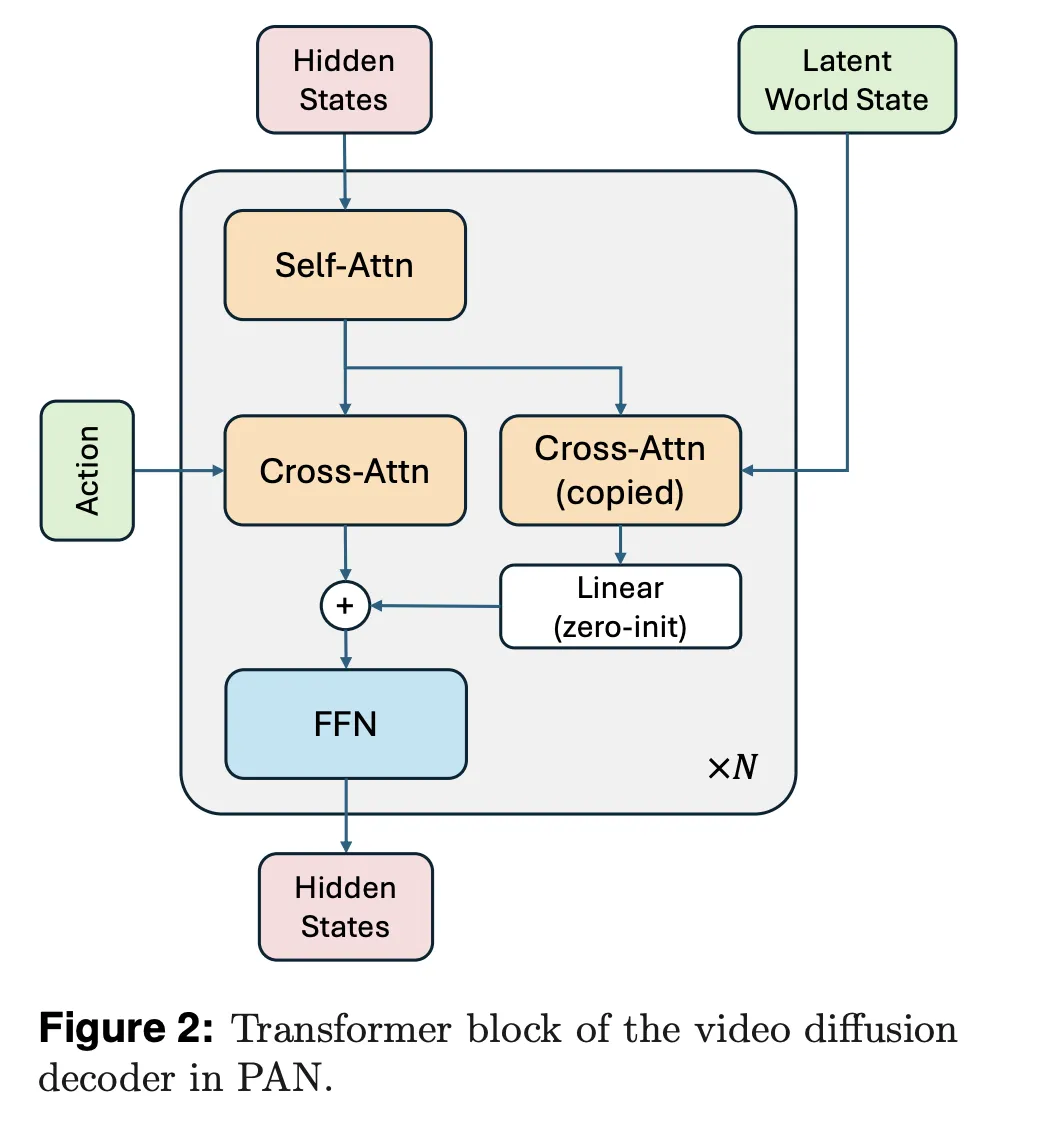

The video diffusion decoder is tailored from Wan2.1-T2V-14B, a diffusion transformer for prime constancy video technology. The analysis staff trains this decoder with a stream matching goal, utilizing one thousand denoising steps and a Rectified Movement formulation. The decoder situations on each the expected latent world state and the present pure language motion, with a devoted cross consideration stream for the world state and one other for the motion textual content.

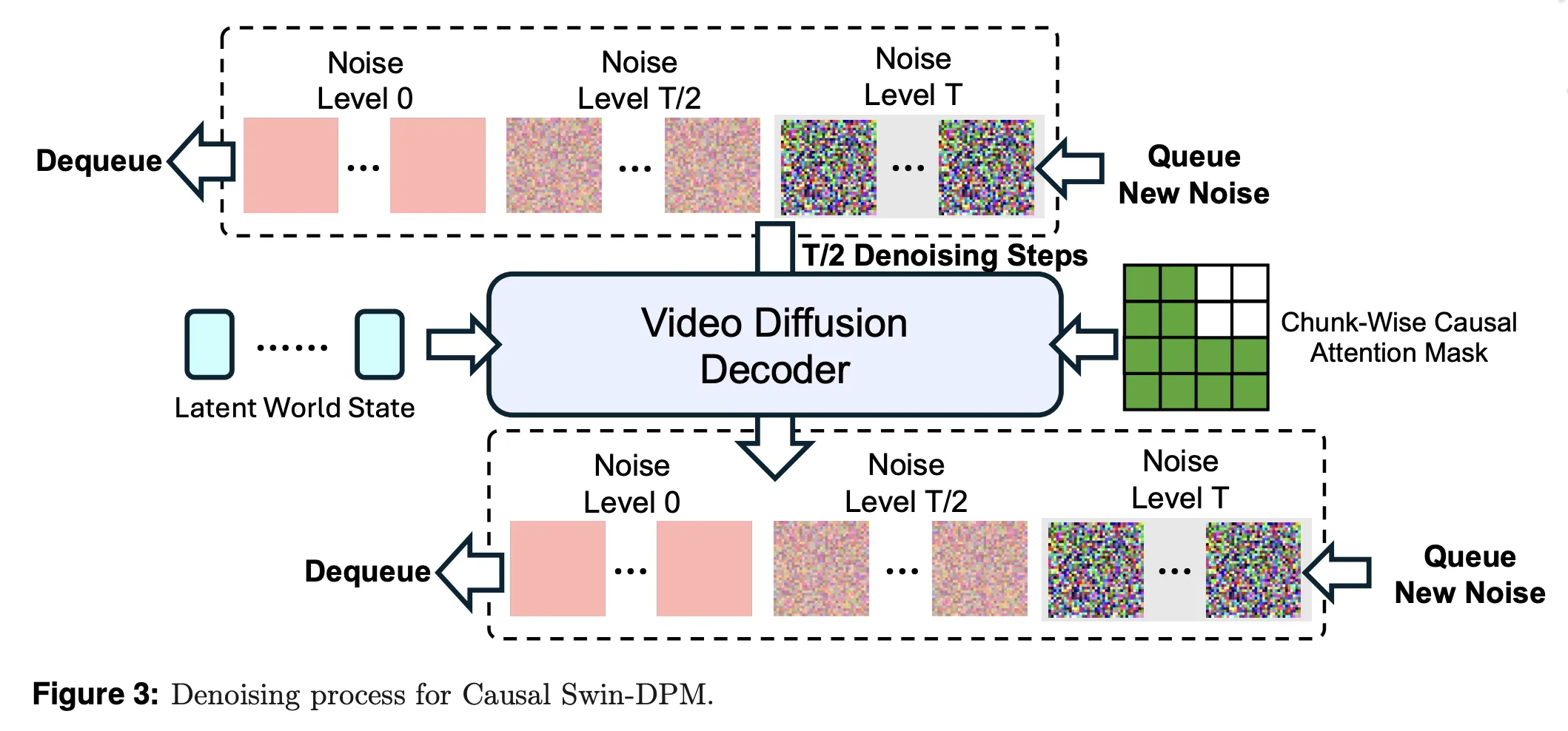

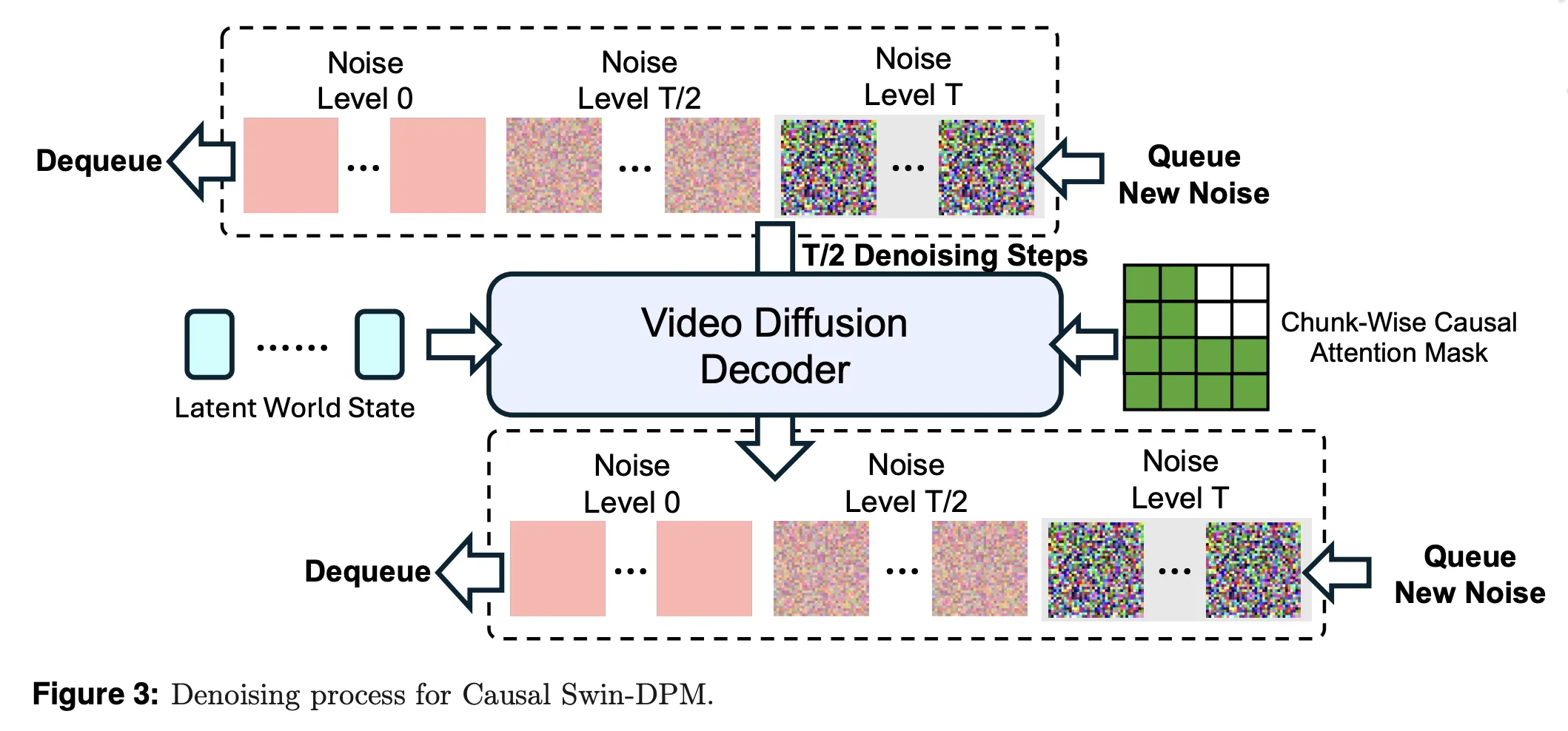

Causal Swin DPM and sliding window diffusion

Naively chaining single shot video fashions by conditioning solely on the final body results in native discontinuities and speedy high quality degradation over lengthy rollouts. PAN addresses this with Causal Swin DPM, which augments the Shift Window Denoising Course of Mannequin with chunk sensible causal consideration.

The decoder operates on a sliding temporal window that holds two chunks of video frames at totally different noise ranges. Throughout denoising, one chunk strikes from excessive noise to scrub frames after which leaves the window. A brand new noisy chunk enters on the different finish. Chunk sensible causal consideration ensures that the later chunk can solely attend to the sooner one, to not unseen future actions. This retains transitions between chunks easy and reduces error accumulation over lengthy horizons.

PAN additionally provides managed noise to the conditioning body, quite than utilizing a wonderfully sharp body. This suppresses incidental pixel particulars that don’t matter for dynamics and encourages the mannequin to give attention to steady construction equivalent to objects and structure.

Coaching stack and information building

PAN is skilled in two phases. Within the first stage, the analysis staff adapts Wan2.1 T2V 14B into the Causal Swin DPM structure. They prepare the decoder in BFloat16 with AdamW, a cosine schedule, gradient clipping, FlashAttention3 and FlexAttention kernels, and a hybrid sharded information parallel scheme throughout 960 NVIDIA H200 GPUs.

Within the second stage, they combine the frozen Qwen2.5 VL 7B Instruct spine with the video diffusion decoder beneath the GLP goal. The imaginative and prescient language mannequin stays frozen. The mannequin learns question embeddings and the decoder in order that predicted latents and reconstructed movies keep constant. This joint coaching additionally makes use of sequence parallelism and Ulysses model consideration sharding to deal with lengthy context sequences. Early stopping ends coaching after 1 epoch as soon as validation converges, though the schedule permits 5 epochs.

Coaching information comes from extensively used publicly accessible video sources that cowl on a regular basis actions, human object interactions, pure environments, and multi agent situations. Lengthy kind movies are segmented into coherent clips utilizing shot boundary detection. A filtering pipeline removes static or overly dynamic clips, low aesthetic high quality, heavy textual content overlays, and display screen recordings utilizing rule primarily based metrics, pretrained detectors, and a customized VLM filter. The analysis staff then re-captions clips with dense, temporally grounded descriptions that emphasize movement and causal occasions.

Benchmarks, motion constancy, lengthy horizon stability, planning

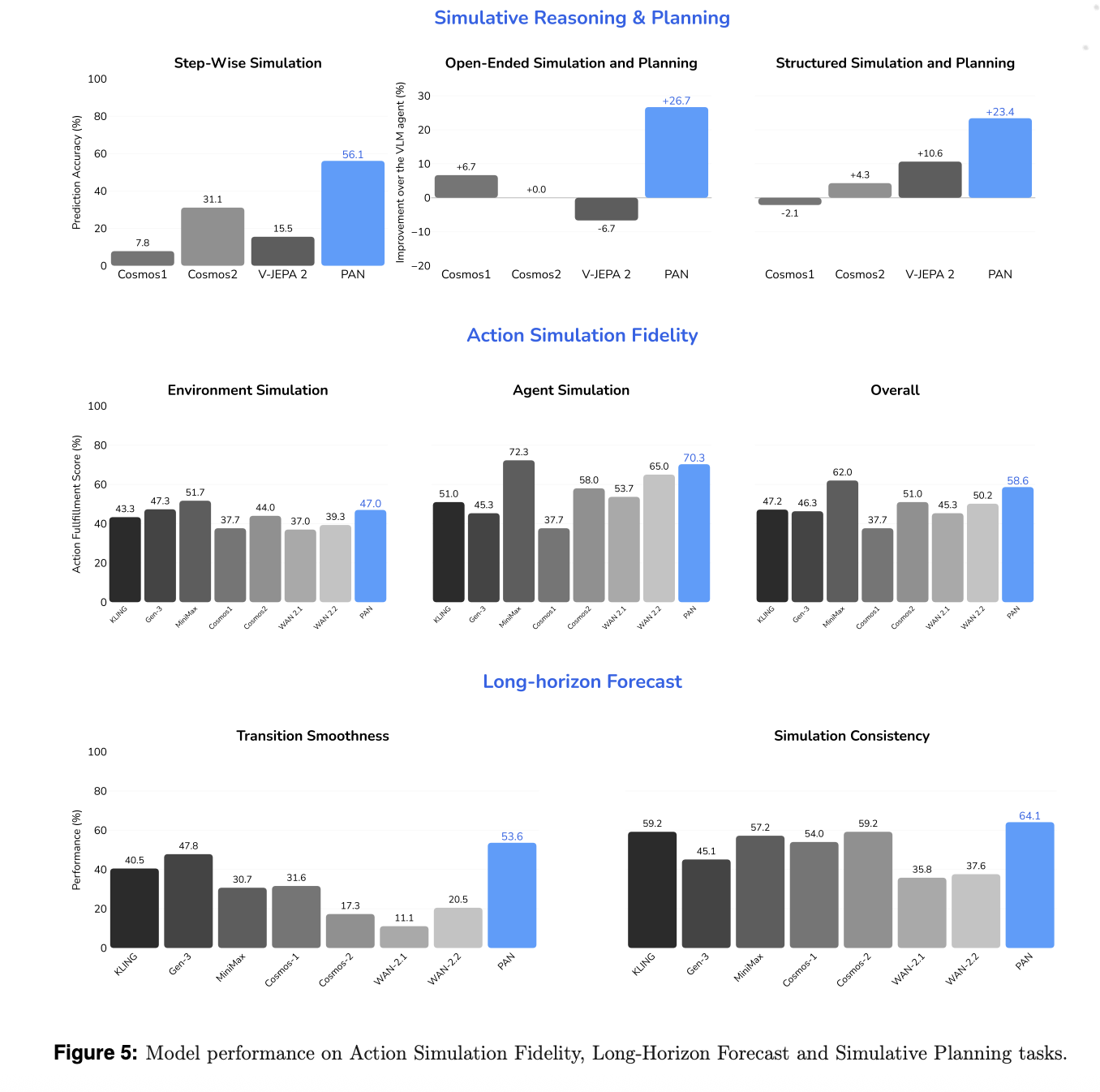

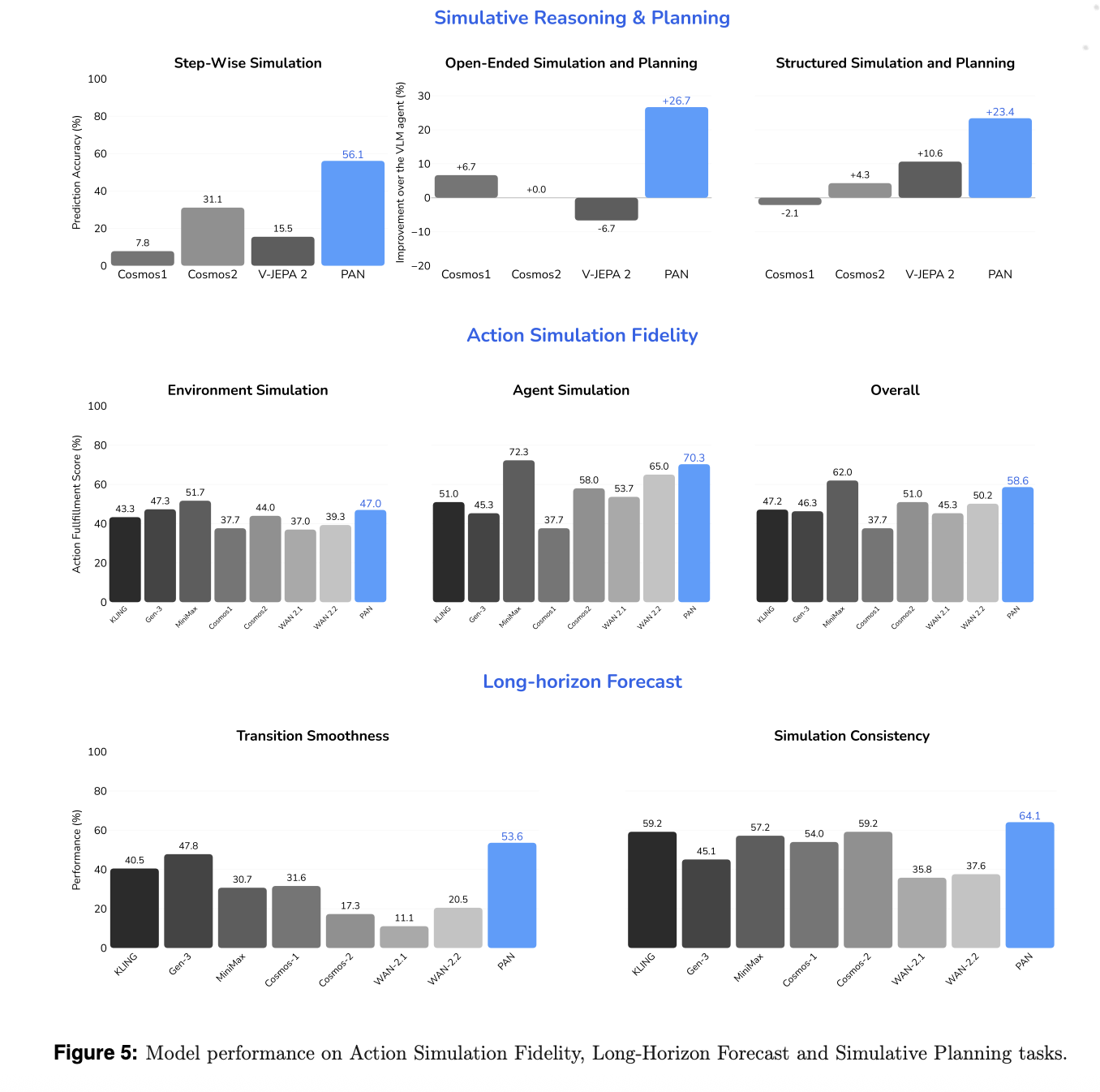

The analysis staff evaluates the mannequin alongside three axes, motion simulation constancy, lengthy horizon forecast, and simulative reasoning and planning, in opposition to each open supply and business video mills and world fashions. Baselines embody WAN 2.1 and a couple of.2, Cosmos 1 and a couple of, V JEPA 2, and business programs equivalent to KLING, MiniMax Hailuo, and Gen 3.

For motion simulation constancy, a VLM primarily based decide scores how effectively the mannequin executes language specified actions whereas sustaining a steady background. PAN reaches 70.3% accuracy on agent simulation and 47% on surroundings simulation, for an general rating of 58.6%. It achieves the very best constancy amongst open supply fashions and surpasses most business baselines.

For lengthy horizon forecast, the analysis staff measures Transition Smoothness and Simulation Consistency. Transition Smoothness makes use of optical stream acceleration to quantify how easy movement is throughout motion boundaries. Simulation Consistency makes use of metrics impressed by WorldScore to watch degradation over prolonged sequences. PAN scores 53.6% on Transition Smoothness and 64.1% on Simulation Consistency and exceeds all baselines, together with KLING and MiniMax, on these metrics.

For simulative reasoning and planning, PAN is used as an inner simulator inside an OpenAI-o3 primarily based agent loop. In step sensible simulation, PAN achieves 56.1% accuracy, the most effective amongst open supply world fashions.

Key Takwaways

- PAN implements the Generative Latent Prediction structure, combining a Qwen2.5-VL-7B primarily based latent dynamics spine with a Wan2.1-T2V-14B primarily based video diffusion decoder, to unify latent world reasoning and lifelike video technology.

- The Causal Swin DPM mechanism introduces a sliding window, chunk sensible causal denoising course of that situations on partially noised previous chunks, which stabilizes lengthy horizon video rollouts and reduces temporal drift in comparison with naive final body conditioning.

- PAN is skilled in two phases, first adapting the Wan2.1 decoder to Causal Swin DPM on 960 NVIDIA H200 GPUs with a stream matching goal, then collectively coaching the GLP stack with a frozen Qwen2.5-VL spine and realized question embeddings plus decoder.

- The coaching corpus consists of huge scale video motion pairs from various domains, processed with segmentation, filtering, and dense temporal recaptioning, enabling PAN to be taught motion conditioned, lengthy vary dynamics as a substitute of remoted brief clips.

- PAN achieves cutting-edge open supply outcomes on motion simulation constancy, lengthy horizon forecasting, and simulative planning, with reported scores equivalent to 70.3% agent simulation, 47% surroundings simulation, 53.6% transition smoothness, and 64.1% simulation consistency, whereas remaining aggressive with main business programs.

Comparability Desk

| Dimension | PAN | Cosmos video2world WFM | Wan2.1 T2V 14B | V JEPA 2 |

|---|---|---|---|---|

| Group | MBZUAI Institute of Basis Fashions | NVIDIA Analysis | Wan AI and Open Laboratory | Meta AI |

| Main position | Basic world mannequin for interactive, lengthy horizon world simulation with pure language actions | World basis mannequin platform for Bodily AI with video to world technology for management and navigation | Top quality textual content to video and picture to video generator for common content material creation and enhancing | Self supervised video mannequin for understanding, prediction and planning duties |

| World mannequin framing | Express GLP world mannequin, latent state, motion, and subsequent remark outlined, focuses on simulative reasoning and planning | Described as world basis mannequin that generates future video worlds from previous video and management immediate, geared toward Bodily AI, robotics, driving, navigation | Framed as video technology mannequin, not primarily as world mannequin, no persistent inner world state described in docs | Joint embedding predictive structure for video, focuses on latent prediction quite than specific generative supervision in remark house |

| Core structure | GLP stack, imaginative and prescient encoder from Qwen2.5 VL 7B, LLM primarily based latent dynamics spine, video diffusion decoder with Causal Swin DPM | Household of diffusion primarily based and autoregressive world fashions, with video2world technology, plus diffusion decoder and immediate upsampler primarily based on a language mannequin | Spatio temporal variational autoencoder and diffusion transformer T2V mannequin at 14 billion parameters, helps a number of generative duties and resolutions | JEPA model encoder plus predictor structure that matches latent representations of consecutive video observations |

| Spine and latent house | Multimodal latent house from Qwen2.5 VL 7B, used each for encoding observations and for autoregressive latent prediction beneath actions | Token primarily based video2world mannequin with textual content immediate conditioning and optionally available diffusion decoder for refinement, latent house particulars rely upon mannequin variant | Latent house from VAE plus diffusion transformer, pushed primarily by textual content or picture prompts, no specific agent motion sequence interface | Latent house constructed from self supervised video encoder with predictive loss in illustration house, not generative reconstruction loss |

| Motion or management enter | Pure language actions in dialogue format, utilized at each simulation step, mannequin predicts subsequent latent state and decodes video conditioned on motion and historical past | Management enter as textual content immediate and optionally digital camera pose for navigation and downstream duties equivalent to humanoid management and autonomous driving | Textual content prompts and picture inputs for content material management, no specific multi step agent motion interface described as world mannequin management | Doesn’t give attention to pure language actions, used extra as visible illustration and predictor module inside bigger brokers or planners |

| Lengthy horizon design | Causal Swin DPM sliding window diffusion, chunk sensible causal consideration, conditioning on barely noised final body to scale back drift and preserve steady lengthy horizon rollouts | Video2world mannequin generates future video given previous window and immediate, helps navigation and lengthy sequences however the paper doesn’t describe a Causal Swin DPM model mechanism | Can generate a number of seconds at 480 P and 720 P, focuses on visible high quality and movement, lengthy horizon stability is evaluated by Wan Bench however with out specific world state mechanism | Lengthy temporal reasoning comes from predictive latent modeling and self supervised coaching, not from generative video rollouts with specific diffusion home windows |

| Coaching information focus | Massive scale video motion pairs throughout various bodily and embodied domains, with segmentation, filtering and dense temporal recaptioning for motion conditioned dynamics | Mixture of proprietary and public Web movies targeted on Bodily AI classes equivalent to driving, manipulation, human exercise, navigation and nature dynamics, with a devoted curation pipeline | Massive open area video and picture corpora for common visible technology, with Wan Bench analysis prompts, not focused particularly at agent surroundings rollouts | Massive scale unlabelled video information for self supervised illustration studying and prediction, particulars in V JEPA 2 paper |

PAN is a vital step as a result of it operationalizes Generative Latent Prediction with manufacturing scale parts equivalent to Qwen2.5-VL-7B and Wan2.1-T2V-14B, then validates this stack on effectively outlined benchmarks for motion simulation, lengthy horizon forecasting, and simulative planning. The coaching and analysis pipeline is clearly documented by the analysis staff, the metrics are reproducible, and the mannequin is launched inside a clear world modeling framework quite than as an opaque video demo. Total, PAN exhibits how a imaginative and prescient language spine plus diffusion video decoder can operate as a sensible world mannequin as a substitute of a pure generative toy.

Try the Paper, Technical particulars and Venture. Be at liberty to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be at liberty to comply with us on Twitter and don’t neglect to hitch our 100k+ ML SubReddit and Subscribe to our E-newsletter. Wait! are you on telegram? now you’ll be able to be a part of us on telegram as effectively.