Tabular data is still where many important models run in production. Finance, healthcare, energy and industry teams work with tables of rows and columns, not images or long text. Prior Labs now extends this space with TabPFN-2.5, a new tabular foundation model that scales in-context learning to 50,000 samples and 2,000 features while keeping a training-free workflow.

From TabPFN And TabPFNv2 To TabPFN-2.5

The first TabPFN showed that a transformer can learn a Bayesian-like inference procedure on synthetic tabular tasks. It handled up to about 1,000 samples and clean numerical features. TabPFNv2 extended this to messy real-world data. It added support for categorical features, missing values and outliers, and was practical up to 10,000 samples and 500 features.

TabPFN-2.5 is the next generation in this line. Prior Labs describes it as best for datasets with up to 50,000 samples and 2,000 features, which is a 5 times increase in rows and a 4 times increase in columns over TabPFNv2. That gives roughly 20 times more data cells in the supported regime. The model is exposed through the tabpfn Python package and also through an API.
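
The scaling factors quoted above follow directly from the recommended limits. A quick check, using only the numbers stated in the text (the variable names are just for illustration):

```python
# Recommended upper limits quoted in the text above.
v2_rows, v2_features = 10_000, 500
v25_rows, v25_features = 50_000, 2_000

row_factor = v25_rows / v2_rows              # growth in rows
feature_factor = v25_features / v2_features  # growth in columns
cell_factor = (v25_rows * v25_features) / (v2_rows * v2_features)  # growth in cells

print(f"rows x{row_factor:g}, features x{feature_factor:g}, cells x{cell_factor:g}")
```

The cell factor is simply the product of the row and feature factors, which is where the "roughly 20 times" figure comes from.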

| Aspect | TabPFN (v1) | TabPFNv2 | TabPFN-2.5 |
|---|---|---|---|
| Max Rows (recommended) | 1,000 | 10,000 | 50,000 |
| Max Features (recommended) | 100 | 500 | 2,000 |
| Supported data types | Numeric only | Mixed | Mixed |

In-Context Learning For Tables

TabPFN-2.5 follows the same prior-data fitted network idea as earlier versions. It is a transformer-based foundation model that uses in-context learning to solve tabular prediction problems in a forward pass. At training time, the model is meta-trained on large synthetic distributions of tabular tasks. At inference time, you pass the training rows and labels together with the test rows. The model runs one forward pass and outputs predictions, so there is no dataset-specific gradient descent or hyperparameter search.
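
To make the shape of that workflow concrete, here is a minimal sketch. It is not TabPFN and does not use the tabpfn package: `ToyInContextClassifier` is a hypothetical stand-in whose "model" is a fixed distance-weighted vote, chosen only to show that fitting amounts to storing the labelled context and that prediction is a single pass over context and test rows.

```python
import math


class ToyInContextClassifier:
    """Illustrative stand-in, NOT TabPFN: fit() only stores the labelled context."""

    def fit(self, X_train, y_train):
        # No gradient descent and no hyperparameter search: just keep the context.
        self.X_train = [list(row) for row in X_train]
        self.y_train = list(y_train)
        self.classes_ = sorted(set(self.y_train))
        return self

    def predict_proba(self, X_test):
        probabilities = []
        for x in X_test:
            # One pass over the context: closer rows get larger weights.
            weights = [
                math.exp(-sum((a - b) ** 2 for a, b in zip(x, row)))
                for row in self.X_train
            ]
            total = sum(weights)
            probabilities.append([
                sum(w for w, y in zip(weights, self.y_train) if y == c) / total
                for c in self.classes_
            ])
        return probabilities

    def predict(self, X_test):
        # Pick the class with the highest probability for each test row.
        return [
            self.classes_[max(range(len(p)), key=p.__getitem__)]
            for p in self.predict_proba(X_test)
        ]


# Training rows, labels and test rows are supplied together at inference time.
X_train = [[0.0, 0.1], [0.2, 0.0], [3.0, 3.1], [3.2, 2.9]]
y_train = [0, 0, 1, 1]
X_test = [[0.1, 0.1], [3.1, 3.0]]

model = ToyInContextClassifier().fit(X_train, y_train)
predictions = model.predict(X_test)
print(predictions)
```

In the real model the fixed voting rule is replaced by a transformer whose weights were meta-trained on synthetic tasks, but the calling pattern described above is the same: context in, predictions out, no per-dataset training loop.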

Benchmark Results On TabArena And RealCause

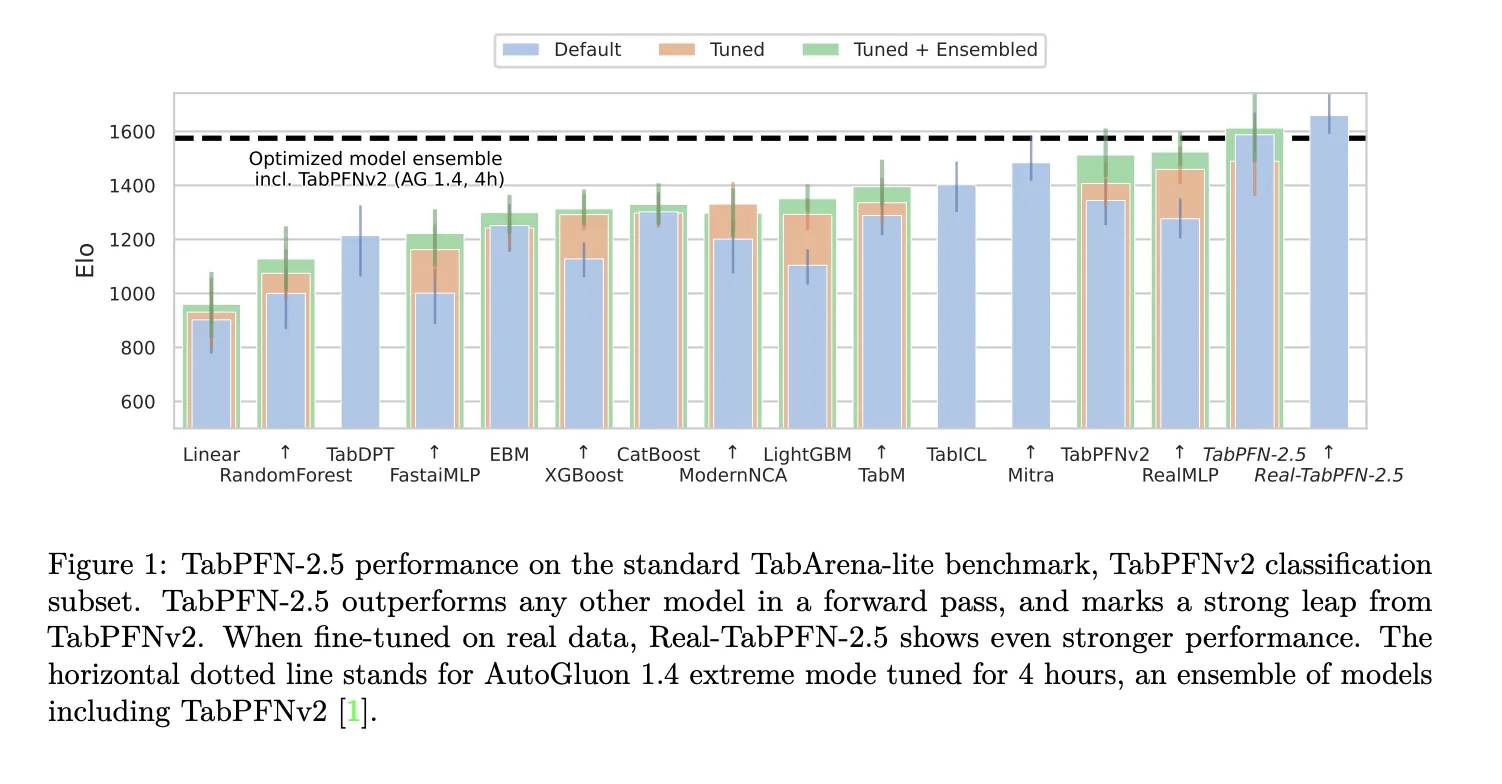

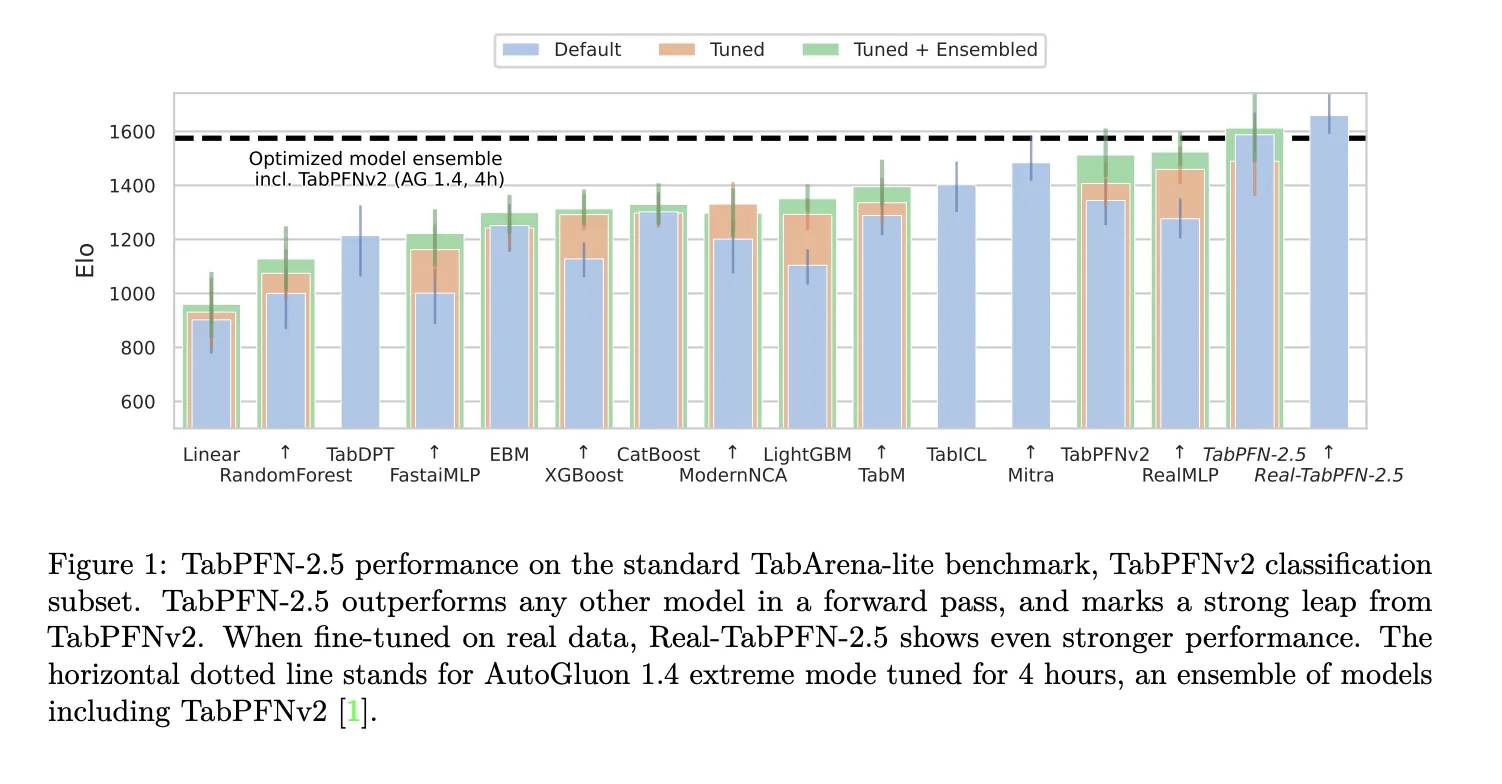

The research team uses the TabArena Lite benchmark to measure medium-sized tasks of up to 10,000 samples and 500 features. TabPFN-2.5 in a single forward pass outperforms every other model in the comparison. When the Real-TabPFN-2.5 variant is fine-tuned on real datasets, the lead increases further. AutoGluon 1.4 in extreme mode is the baseline ensemble, tuned for 4 hours and even including TabPFNv2.

On industry-standard benchmarks with up to 50,000 data points and 2,000 features, TabPFN-2.5 substantially outperforms tuned tree-based models such as XGBoost and CatBoost. On the same benchmarks it matches the accuracy of AutoGluon 1.4, which runs a complex ensemble tuned for 4 hours that includes previous methods.

Model Architecture And Training Setup

The model architecture follows TabPFNv2 with alternating attention and 18 to 24 layers. Alternating attention means that the network attends along the sample axis and along the feature axis in separate stages, which enforces permutation invariance over rows and columns. This design is important for tabular data, where the order of rows and the order of columns do not carry information.
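
The following toy sketch illustrates why attending along each axis separately makes the ordering of rows and columns irrelevant. It is a simplification under stated assumptions, not the TabPFN architecture: each cell is a single scalar, queries, keys and values are the raw values (no learned projections), and the function names are hypothetical. The check at the end confirms that shuffling rows and columns of the input only shuffles the output in the same way.

```python
import math


def self_attention(seq):
    """Toy 1-D self-attention: queries, keys and values are the raw scalars."""
    out = []
    for q in seq:
        scores = [q * k for k in seq]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append(sum(w * v for w, v in zip(weights, seq)) / total)
    return out


def attend_over_features(table):
    # Within each row (sample), cells attend to the other features.
    return [self_attention(row) for row in table]


def attend_over_samples(table):
    # Within each column (feature), cells attend to the other samples.
    columns = [list(col) for col in zip(*table)]
    attended = [self_attention(col) for col in columns]
    return [list(row) for row in zip(*attended)]


def alternating_block(table):
    # One feature-axis stage followed by one sample-axis stage.
    return attend_over_samples(attend_over_features(table))


def permute(table, row_perm, col_perm):
    return [[table[r][c] for c in col_perm] for r in row_perm]


table = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5], [-2.0, 1.0, 0.25]]
row_perm, col_perm = [2, 0, 1], [1, 2, 0]

out_then_permute = permute(alternating_block(table), row_perm, col_perm)
permute_then_out = alternating_block(permute(table, row_perm, col_perm))

max_diff = max(
    abs(a - b)
    for row_a, row_b in zip(out_then_permute, permute_then_out)
    for a, b in zip(row_a, row_b)
)
print(max_diff < 1e-9)
```

Because no positional information enters either stage, the block treats a table as an unordered set of rows and an unordered set of columns, which is the property the paragraph above refers to.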

The training setup keeps the prior-data based learning idea. TabPFN-2.5 uses synthetic tabular tasks with different priors over functions and data distributions as its meta-training source. Real-TabPFN-2.5 uses continued pre-training on a set of real-world tabular datasets from repositories like OpenML and Kaggle, while the team carefully avoids overlap with evaluation benchmarks.

Key Takeaways

- TabPFN-2.5 scales prior-data fitted tabular transformers to about 50,000 samples and 2,000 features while keeping a single forward pass, no-tuning workflow.

- The model is trained on synthetic tabular tasks and evaluated on TabArena, internal industry benchmarks and RealCause, where it substantially outperforms tuned tree-based baselines and matches AutoGluon 1.4 on benchmarks in this size range.

- TabPFN-2.5 keeps the TabPFNv2-style alternating attention transformer for rows and features, which enables permutation invariance over tables and in-context learning without task-specific training.

- A distillation engine turns TabPFN-2.5 into compact MLP or tree ensemble students that preserve most of the accuracy while giving much lower latency and plug-in deployment in existing tabular stacks.
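
The distillation idea in the last takeaway can be sketched in a few lines. This is a generic teacher-student illustration, not Prior Labs' distillation engine: the teacher here is a hypothetical 1-nearest-neighbour stand-in for an expensive model, and the student is a single-threshold decision stump, the smallest possible "tree". The student is fitted to the teacher's predictions on a set of transfer points rather than to ground-truth labels.

```python
def make_teacher(context):
    """Stand-in teacher (NOT TabPFN): 1-nearest-neighbour over labelled context rows."""
    def predict(x):
        nearest = min(context, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict


def fit_stump(xs, teacher_labels):
    """Student: the single threshold t for 'x > t -> class 1' that best matches the teacher."""
    best_threshold, best_matches = None, -1
    for t in sorted(xs):
        matches = sum((x > t) == bool(y) for x, y in zip(xs, teacher_labels))
        if matches > best_matches:
            best_threshold, best_matches = t, matches
    return best_threshold


# Labelled context the teacher relies on at prediction time.
context = [(0.0, 0), (1.0, 0), (2.0, 0), (3.0, 1), (4.0, 1), (5.0, 1)]
teacher = make_teacher(context)

# Unlabelled transfer points: the student only ever sees the teacher's outputs.
transfer_xs = [i * 0.25 for i in range(21)]
teacher_labels = [teacher(x) for x in transfer_xs]

threshold = fit_stump(transfer_xs, teacher_labels)


def student(x):
    # A single comparison at inference time, no context lookup.
    return int(x > threshold)


agreement = sum(student(x) == teacher(x) for x in transfer_xs) / len(transfer_xs)
print(threshold, agreement)
```

The student no longer needs the context rows or the teacher at prediction time, which is the source of the latency benefit. How much accuracy survives in practice depends on the student's capacity and the data.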

TabPFN-2.5 is an important release for tabular machine learning because it turns model selection and hyperparameter tuning into a single forward pass workflow on datasets with up to 50,000 samples and 2,000 features. It combines synthetic meta-training, Real-TabPFN-2.5 fine-tuning and a distillation engine that produces MLP and TreeEns students, with a clear non-commercial license and enterprise path. Overall, this release makes prior-data fitted networks practical for real tabular problems.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.