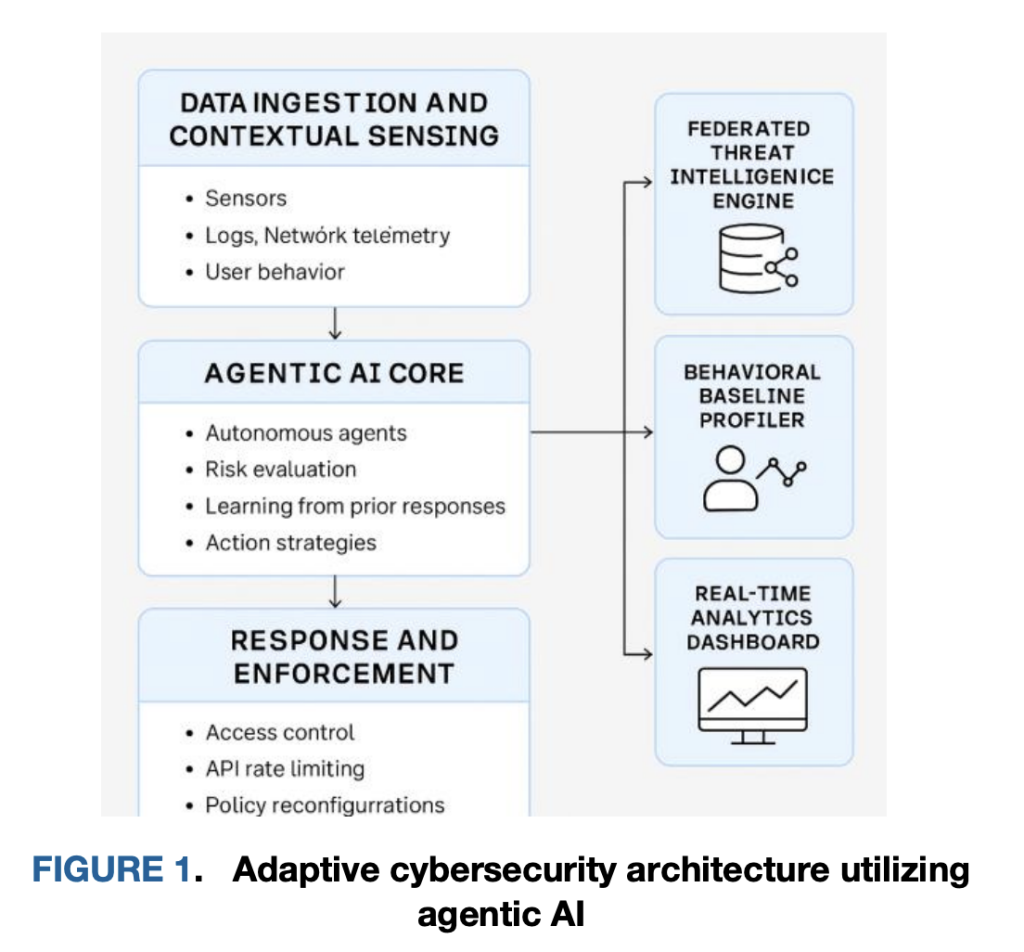

Can your AI security stack profile, reason, and neutralize a live security threat in ~220 ms, without a central round-trip? A team of researchers from Google and the University of Arkansas at Little Rock outline an agentic cybersecurity “immune system” built from lightweight, autonomous sidecar AI agents colocated with workloads (Kubernetes pods, API gateways, edge services). Instead of exporting raw telemetry to a SIEM and waiting on batched classifiers, each agent learns local behavioral baselines, evaluates anomalies using federated intelligence, and applies least-privilege mitigations directly at the point of execution. In a controlled cloud-native simulation, this edge-first loop cut decision-to-mitigation to ~220 ms (up to ≈3.4× faster than centralized pipelines), achieved F1 ≈ 0.89, and held host overhead under 10% CPU/RAM. The authors present this as evidence that collapsing detection and enforcement into the workload plane can deliver both speed and fidelity without material resource penalties.

What does “Profile → Reason → Neutralize” mean at the primitive level?

Profile. Agents are deployed as sidecars/daemonsets alongside microservices and API gateways. They build behavioral fingerprints from execution traces, syscall paths, API call sequences, and inter-service flows. This local baseline adapts to short-lived pods, rolling deploys, and autoscaling, conditions that routinely break perimeter controls and static allowlists. Profiling is not just a threshold on counts; it retains structural features (order, timing, peer set) that allow detection of zero-day-like deviations. The research team frames this as continuous, context-aware baselining across ingestion and sensing layers so that “normal” is learned per workload and per identity boundary.
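To make the "structure, not counts" point concrete, here is a minimal sketch that baselines ordered n-grams of API calls. The `SequenceBaseline` class, the n-gram choice, and the call names are illustrative assumptions, not the paper's profiling method.

```python
from collections import Counter
from typing import Iterator, Sequence, Tuple


def ngrams(calls: Sequence[str], n: int = 3) -> Iterator[Tuple[str, ...]]:
    """Yield ordered windows of n consecutive calls."""
    return (tuple(calls[i:i + n]) for i in range(len(calls) - n + 1))


class SequenceBaseline:
    """Hypothetical per-workload baseline over ordered API-call n-grams."""

    def __init__(self, n: int = 3) -> None:
        self.n = n
        self.seen: Counter = Counter()

    def learn(self, calls: Sequence[str]) -> None:
        """Record every n-gram observed during normal operation."""
        self.seen.update(ngrams(calls, self.n))

    def anomaly_score(self, calls: Sequence[str]) -> float:
        """Fraction of n-grams in this trace that were never seen while learning."""
        grams = list(ngrams(calls, self.n))
        if not grams:
            return 0.0
        unseen = sum(1 for g in grams if g not in self.seen)
        return unseen / len(grams)


baseline = SequenceBaseline(n=3)
baseline.learn(["auth", "list", "get", "get", "logout"])

# Same calls, same counts; only the order differs in the second trace.
normal = baseline.anomaly_score(["auth", "list", "get", "get", "logout"])
reordered = baseline.anomaly_score(["list", "auth", "get", "logout", "get"])
print(normal, reordered)
```

A pure count threshold would treat both traces as identical, while the ordered baseline separates them. A real agent would also need timing and peer-set features, plus decay so the baseline tracks rolling deploys.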

Reason. When an anomaly appears (for example, an unusual burst of high-entropy uploads from a low-trust principal or a never-seen-before API call graph), the local agent mixes anomaly scores with federated intelligence (shared indicators and model deltas learned by peers) to produce a risk estimate. Reasoning is designed to be edge-first: the agent decides without a round-trip to a central adjudicator, and the trust decision is continuous rather than a static role gate. This aligns with zero-trust, where identity and context are evaluated at each request and not just at session start, and it reduces central bottlenecks that add seconds of latency under load.
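One way to picture the fusion step is a weighted blend of the local anomaly score and the strongest matching peer indicator, scaled up for low-trust principals. The weights, the scaling rule, and the `PeerIndicator` shape below are assumptions for illustration; the paper does not specify this formula.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class PeerIndicator:
    """Hypothetical indicator shared by peer agents (not a schema from the paper)."""
    fingerprint: str
    confidence: float  # peer-reported confidence in [0, 1]


def risk_estimate(local_anomaly: float,
                  principal_trust: float,
                  observed: Set[str],
                  peer_indicators: Iterable[PeerIndicator],
                  w_local: float = 0.6,
                  w_peer: float = 0.4) -> float:
    """Blend local and federated evidence, then scale up for low-trust principals."""
    peer_match = max(
        (p.confidence for p in peer_indicators if p.fingerprint in observed),
        default=0.0,
    )
    blended = w_local * local_anomaly + w_peer * peer_match
    # Assumed rule: trust 1.0 leaves the score unchanged, trust 0.0 multiplies it by 1.5.
    scaled = blended * (1.0 + 0.5 * (1.0 - principal_trust))
    return max(0.0, min(1.0, scaled))


peers = [PeerIndicator("graph:upload-burst", 0.9)]
low_trust = risk_estimate(0.7, 0.2, {"graph:upload-burst"}, peers)
high_trust = risk_estimate(0.7, 0.9, {"graph:upload-burst"}, peers)
no_peer_match = risk_estimate(0.7, 0.9, {"graph:other"}, peers)
print(low_trust, high_trust, no_peer_match)
```

Everything here runs in-process on the agent, which is the property the edge-first design depends on: peer indicators arrive asynchronously, and the decision itself needs no network call.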

Neutralize. If risk exceeds a context-sensitive threshold, the agent executes an immediate local control mapped to least-privilege actions: quarantine the container (pause/isolate), rotate a credential, apply a rate-limit, revoke a token, or tighten a per-route policy. Enforcement is written back to policy stores and logged with a human-readable rationale for audit. The fast path here is the core differentiator: in the reported evaluation, the autonomous path triggers in ~220 ms versus ~540–750 ms for centralized ML or firewall update pipelines, which translates into a latency reduction of up to ~70% (measured against the ~750 ms path) and fewer opportunities for lateral movement during the decision window.
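The threshold-then-act step can be sketched as a small mapping from risk to the least disruptive control that fits. The threshold values, workload classes, and margin bands are hypothetical; only the action names come from the text above.

```python
from enum import Enum
from typing import Tuple


class Action(Enum):
    NONE = "none"
    RATE_LIMIT = "rate_limit"
    REVOKE_TOKEN = "revoke_token"
    QUARANTINE = "quarantine_container"


# Hypothetical context-sensitive thresholds: sensitive workloads trip earlier.
THRESHOLDS = {"standard": 0.80, "sensitive": 0.60}


def neutralize(risk: float, workload_class: str) -> Tuple[Action, str]:
    """Pick the least-privilege action for this risk and return a rationale string."""
    threshold = THRESHOLDS[workload_class]
    if risk < threshold:
        return Action.NONE, f"risk {risk:.2f} below threshold {threshold:.2f}"
    margin = risk - threshold
    if margin < 0.10:
        action = Action.RATE_LIMIT
    elif margin < 0.25:
        action = Action.REVOKE_TOKEN
    else:
        action = Action.QUARANTINE
    rationale = (f"risk {risk:.2f} exceeded {workload_class} threshold "
                 f"{threshold:.2f} by {margin:.2f}; applied {action.value}")
    return action, rationale


action, why = neutralize(0.95, "sensitive")
print(action.value, "|", why)
```

Returning the rationale alongside the action keeps the audit trail in the same code path as enforcement, so a decision cannot be taken without also producing its explanation.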

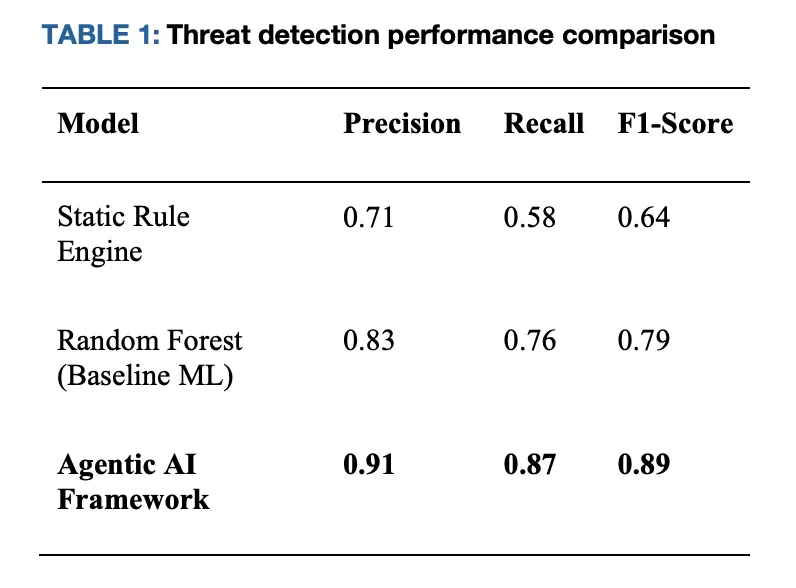

Where do the numbers come from, and what were the baselines?

The research team evaluated the architecture in a Kubernetes-native simulation spanning API abuse and lateral-movement scenarios. Against two typical baselines, (i) static rule pipelines and (ii) a batch-trained classifier, the agentic approach reports Precision 0.91 / Recall 0.87 / F1 0.89, while the baselines land near F1 0.64 (rules) and F1 0.79 (baseline ML). Decision latency falls to ~220 ms for local enforcement, compared with ~540–750 ms for centralized paths that require coordination with a controller or external firewall. Resource overhead on host services remains below 10% in CPU/RAM.
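As a quick consistency check, the reported F1 values can be recomputed from the reported precision and recall using the standard harmonic-mean definition. The per-baseline precision and recall figures are the ones listed in the comparative results table in this article.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs as reported for each approach.
reported = {
    "static rules": (0.71, 0.58),
    "baseline ML": (0.83, 0.76),
    "agentic framework": (0.91, 0.87),
}

f1_by_approach = {name: round(f1_score(p, r), 2) for name, (p, r) in reported.items()}
for name, value in f1_by_approach.items():
    print(f"{name}: F1 = {value}")
```

All three recomputed values agree with the reported F1 scores to two decimal places.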

Why does this matter for zero-trust engineering, not simply analysis graphs?

Zero-trust (ZT) requires continuous verification at request-time using identity, device, and context. In practice, many ZT deployments still defer to central policy evaluators, so they inherit control-plane latency and queueing pathologies under load. By moving risk inference and enforcement to the autonomous edge, the architecture turns ZT posture from periodic policy pulls into a set of self-contained, continuously learning controllers that execute least-privilege changes locally and then synchronize state. That design simultaneously reduces mean time-to-contain (MTTC) and keeps decisions near the blast radius, which helps when inter-pod hops are measured in milliseconds. The research team also formalizes federated sharing to distribute indicators/model deltas without heavy raw-data movement, which is relevant for privacy boundaries and multi-tenant SaaS.

How does it integrate with existing stacks: Kubernetes, APIs, and identity?

Operationally, the agents are co-located with workloads (sidecar or node daemon). On Kubernetes, they can hook CNI-level telemetry for flow features, container runtime events for process-level signals, and Envoy/NGINX spans at API gateways for request graphs. For identity, they consume claims from your IdP and compute continuous trust scores that factor in recent behavior and environment (e.g., geo-risk, device posture). Mitigations are expressed as idempotent primitives (network micro-policy updates, token revocation, per-route quotas), so they are simple to roll back or tighten incrementally. The architecture’s control loop (sense → reason → act → learn) is strictly feedback-driven and supports both human-in-the-loop (policy windows, approval gates for high-blast-radius changes) and autonomy for low-impact actions.
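The value of idempotent primitives is easiest to see with a per-route quota written as "set desired state" rather than "decrement by N". The sketch below uses a hypothetical in-memory `PolicyStore`; a real deployment would target whatever policy store the mesh or gateway exposes.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PolicyStore:
    """Hypothetical in-memory stand-in for a gateway or mesh policy store."""
    route_quotas: Dict[str, int] = field(default_factory=dict)


def set_route_quota(store: PolicyStore, route: str, limit: int) -> Optional[int]:
    """Declare the desired quota; return the previous value so it can be restored."""
    previous = store.route_quotas.get(route)
    store.route_quotas[route] = limit
    return previous


def rollback_route_quota(store: PolicyStore, route: str, previous: Optional[int]) -> None:
    """Restore the quota captured before the first mitigation was applied."""
    if previous is None:
        store.route_quotas.pop(route, None)
    else:
        store.route_quotas[route] = previous


store = PolicyStore({"/upload": 100})
before = set_route_quota(store, "/upload", 10)   # first application
set_route_quota(store, "/upload", 10)            # a retry leaves the state unchanged
state_after_retry = dict(store.route_quotas)
rollback_route_quota(store, "/upload", before)
state_after_rollback = dict(store.route_quotas)
print(state_after_retry, state_after_rollback)
```

Because the mitigation declares a target state, an agent that retries after a timeout cannot tighten the quota further by accident. Note that the rollback value has to be captured on the first application only.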

What are the governance and safety guardrails?

Speed without auditability is a non-starter in regulated environments. The research team emphasizes explainable decision logs that capture which signals and thresholds led to the action, with signed and versioned policy/model artifacts. It also discusses privacy-preserving modes that keep sensitive data local while sharing model updates; differentially private updates are mentioned as an option in stricter regimes. For safety, the system supports override/rollback and staged rollouts (e.g., canarying new mitigation templates in non-critical namespaces). This is consistent with broader security work on threats and guardrails for agentic systems; if your org is adopting multi-agent pipelines, cross-check against current threat models for agent autonomy and tool use.
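A decision record that captures signals, threshold, and artifact version might look like the sketch below. Signing the record itself with an HMAC is an assumption made here for illustration (the paper describes signed policy/model artifacts); the field names and the demo key are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Any, Dict

# Demo key only; a real agent would load its key from a secret store.
SIGNING_KEY = b"demo-key-not-for-production"


def build_record(action: str, risk: float, threshold: float,
                 signals: Dict[str, float], policy_version: str) -> Dict[str, Any]:
    """Assemble the fields an auditor needs to reconstruct the decision."""
    return {
        "action": action,
        "risk": risk,
        "threshold": threshold,
        "signals": signals,
        "policy_version": policy_version,
    }


def sign(record: Dict[str, Any]) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def verify(record: Dict[str, Any], signature: str) -> bool:
    """Constant-time comparison against a freshly computed signature."""
    return hmac.compare_digest(sign(record), signature)


record = build_record(
    action="revoke_token",
    risk=0.82,
    threshold=0.60,
    signals={"sequence_anomaly": 0.7, "peer_indicator": 0.9},
    policy_version="v12",
)
signature = sign(record)
tampered = dict(record, threshold=0.90)
print(verify(record, signature), verify(tampered, signature))
```

Sorting keys before hashing makes the signature independent of dictionary ordering, so a record still verifies after a round-trip through a log pipeline.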

How do the reported results translate to production posture?

The evaluation is a 72-hour cloud-native simulation with injected behaviors: API misuse patterns, lateral movement, and zero-day-like deviations. Real systems will add messier signals (e.g., noisy sidecars, multi-cluster networking, mixed CNI plugins), which affects both detection and enforcement timing. That said, the fast-path structure (local decision + local act) is topology-agnostic and should preserve most of the reported latency gain (roughly 2.5–3.4× in the simulation) as long as mitigations are mapped to primitives available in your mesh/runtime. For production, begin with observe-only agents to build baselines, then turn on mitigations for low-risk actions (quota clamps, token revokes), then gate high-blast-radius controls (network slicing, container quarantine) behind policy windows until confidence/coverage metrics are green.
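The staged rollout described above can be expressed as a small gate in front of enforcement. The stage names, action identifiers, and the `decide` function are illustrative assumptions rather than part of the paper.

```python
from enum import IntEnum


class Stage(IntEnum):
    OBSERVE_ONLY = 0       # build baselines, never enforce
    LOW_RISK = 1           # enforce low-risk actions only
    GATED_HIGH_BLAST = 2   # high-blast-radius actions allowed behind gates


LOW_RISK_ACTIONS = {"quota_clamp", "token_revoke"}
HIGH_BLAST_ACTIONS = {"network_slice", "container_quarantine"}


def decide(stage: Stage, action: str,
           in_policy_window: bool = False, approved: bool = False) -> str:
    """Return 'enforce' or 'log_only' for a proposed mitigation."""
    if stage == Stage.OBSERVE_ONLY:
        return "log_only"
    if action in LOW_RISK_ACTIONS:
        return "enforce"
    if action in HIGH_BLAST_ACTIONS:
        gated_ok = stage >= Stage.GATED_HIGH_BLAST and in_policy_window and approved
        return "enforce" if gated_ok else "log_only"
    return "log_only"  # unknown actions are never enforced


print(decide(Stage.OBSERVE_ONLY, "token_revoke"))
print(decide(Stage.LOW_RISK, "token_revoke"))
print(decide(Stage.LOW_RISK, "container_quarantine"))
print(decide(Stage.GATED_HIGH_BLAST, "container_quarantine",
             in_policy_window=True, approved=True))
```

Defaulting unknown actions to `log_only` keeps a newly added mitigation template inert until it is explicitly classified.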

How does this sit in the broader agentic-security landscape?

There is growing research on securing agent systems and using agent workflows for security tasks. The research discussed here is about defense via agent autonomy close to workloads. In parallel, other work tackles threat modeling for agentic AI, secure A2A protocol usage, and agentic vulnerability testing. If you adopt the architecture, pair it with a current agent-security threat model and a test harness that exercises tool-use boundaries and memory safety of agents.

Comparative Results (Kubernetes simulation)

| Metric | Static rules pipeline | Baseline ML (batch classifier) | Agentic framework (edge autonomy) |
|---|---|---|---|
| Precision | 0.71 | 0.83 | 0.91 |
| Recall | 0.58 | 0.76 | 0.87 |
| F1 | 0.64 | 0.79 | 0.89 |
| Decision-to-mitigation latency | ~750 ms | ~540 ms | ~220 ms |
| Host overhead (CPU/RAM) | Moderate | Moderate | <10% |
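The headline speedup figures follow directly from the latency row. The short calculation below derives the speedup factor and percentage reduction against each baseline, using only the approximate latencies in the table.

```python
AGENTIC_MS = 220
BASELINES_MS = {"static rules pipeline": 750, "baseline ML": 540}

speedup = {name: round(ms / AGENTIC_MS, 1) for name, ms in BASELINES_MS.items()}
reduction = {name: round(1 - AGENTIC_MS / ms, 2) for name, ms in BASELINES_MS.items()}

for name in BASELINES_MS:
    print(f"vs {name}: {speedup[name]}x faster, {reduction[name]:.0%} lower latency")
```

The ≈3.4× and ~70% figures correspond to the ~750 ms static rules path; against the ~540 ms batch classifier the gain is closer to 2.5× and 59%.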

Key Takeaways

- Edge-first “cybersecurity immune system.” Lightweight sidecar/daemon AI agents colocated with workloads (Kubernetes pods, API gateways) learn behavioral fingerprints, decide locally, and enforce least-privilege mitigations without SIEM round-trips.

- Measured performance. Reported decision-to-mitigation is ~220 ms, up to about 3.4× faster than centralized pipelines (≈540–750 ms), with F1 ≈ 0.89 (P≈0.91, R≈0.87) in a Kubernetes simulation.

- Low operational cost. Host overhead stays <10% CPU/RAM, making the approach practical for microservices and edge nodes.

- Profile → Reason → Neutralize loop. Agents continuously baseline normal activity (profile), fuse local signals with federated intelligence for risk scoring (reason), and apply immediate, reversible controls such as container quarantine, token rotation, and rate-limits (neutralize).

- Zero-trust alignment. Decisions are continuous and context-aware (identity, device, geo, workload), replacing static role gates and reducing dwell time and lateral movement risk.

- Governance and safety. Actions are logged with explainable rationales; policies/models are signed and versioned; high-blast-radius mitigations can be gated behind human-in-the-loop and staged rollouts.

Summary

Treat defense as a distributed control plane made of profiling, reasoning, and neutralizing agents that act where the threat lives. The reported profile (~220 ms actions, up to ≈3.4× faster than centralized baselines, F1 ≈ 0.89, <10% overhead) is consistent with what you’d expect when you eliminate central hops and let autonomy handle least-privilege mitigations locally. It aligns with zero-trust’s continuous verification and gives teams a practical path to self-stabilizing operations: learn normal, flag deviations with federated context, and contain early, before lateral movement outpaces your control plane.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.